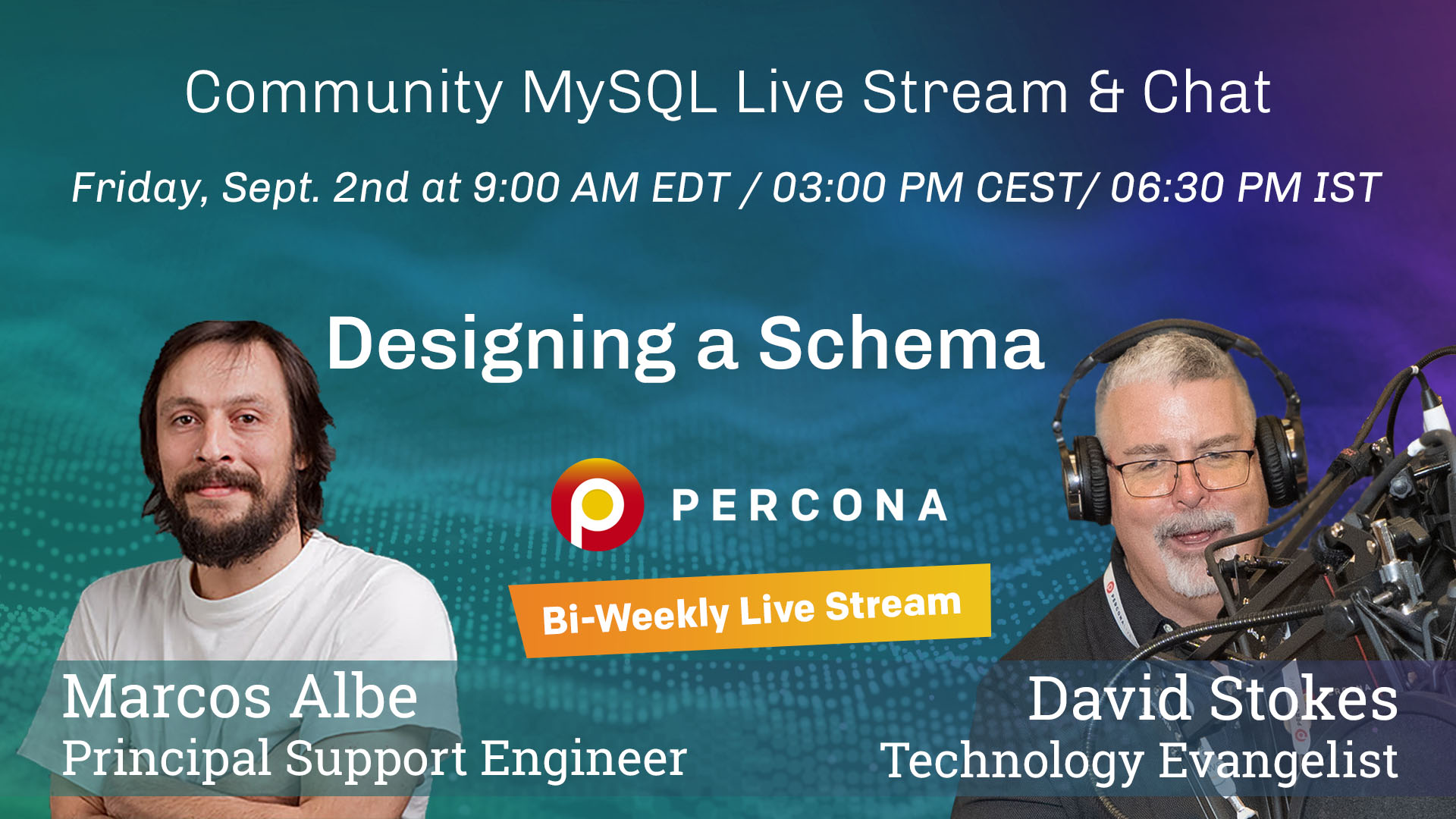

Percona Community Live Stream for MySQL will focus on Designing a Schema. This is a part of our bi-weekly meetup to develop open-source database skills. Come up with your questions and get an answer straight forward to all your questions.

Video

Transcript

Dave Stokes:

Good Morning, good evening, good afternoon, good whatever it is, wherever you are. I’m Dave Stokes from Percona and with me is Marcos Albe. Today we’re talking MySQL schemas. I thought a couple years ago, everything was supposed to be you just open a JSON column and pour everything in there. Do we still need relations?

Marcos Albe:

No, don’t do that. Don’t do that, please. And, you know, let me tell you like, you started with that, let me tell you something, since you kick-started that, that is the biggest lie in databases in the last decade, I believe, you know, schema, less databases are a huge, huge lie, there is no such thing as schema lists. You know, on the world, on the opposite side, when you are using something like MongoDB, you have to more carefully design your schemas. Because the cost of doing lookups, the equivalent of doing a join in relational databases on MongoDB is very high, very high. So much so that Tenjin, Mongo MongoDB, Inc, will still recommend you to use embedded documents. But that always makes things cost more. Right. So I don’t think that’s, that’s very good advice.

Dave Stokes:

Okay. Cool. So the number two lie in databases for the past decade is it’s going to be cheaper replacing the cloud.

Marcos Albe:

Oh, yeah. That’s even a bigger one.

Dave Stokes:

For you, and let you expound on schemas.

Marcos Albe:

Yes, sir. Let me please share my screen. Let me find how do I share screen of this thing? Oh, yes. So here we are. Share. And let me put this on slideshow? Oh, can you see my screen? So welcome, everybody, we’re going to be talking about one of the fundamental things in databases, which is how to properly design your schema, like Dave was saying, you know, the promise of schema-less world has been left behind. And now everybody admits that, you know, schemas are necessary. And that, you know, you might save yourself from doing alter table. But still, you have to carefully design your schema, to have a good foundation to build reasonable queries. So, database is a system to store data and later be able to retrieve it and produce some form of intelligent reporting or gathering to perform other actions. So be able to do those queries later, efficiently. It’s important. And for that, we need to properly design schema. So the first thing you will learn in college if you do a databases course, you know, one of the first things and so just sank is asking me if I am sharing the screen. Can you confirm you can see, David? I think I am. I see you’re on LinkedIn. I’m not sure if that will prevent you from seeing it. I am waiting for David to tell me. I’m sure in YouTube, you should be able to see it. Am I right, David? I think I lost David.

Dave Stokes:

Yes, it’s on Twitch and it’s on YouTube.

Marcos Albe:

Okay, could you share the YouTube link on the chat? So hey, hello did so. So you know, the first thing you have to learn is how to normalize your tables. And this is done by following the so-called normal forms. This is a series of rules that help you that you can easily apply or that you can apply to your schema design that was created by James cod. Back in the 70s when he came up with a relational database, this idea, and it’s a set of six rules. So 123 Boys called rule and five six. Normally we only do 123. And perhaps if you have like a very weird situation, you might apply Boyce Codd, we’re only going to be looking at the first three. And, again, these so that you when you’re designing and schema, you’re creating your application, right, it’s the time of the application inception. So, you will gather the description, you will gather the requirements, the use cases, and you will extract all the data you want like you will base it on that know the information you want to serve on the database. And you will start putting that into so-called entities or relations and with the relations, you will start, then normalizing them. And here we go. So normal forms each, the first normal form, each column of a table must have a single value. So no multivalued columns, each column, which contains a set of values, or nested records, you know, like a JSON, those are not allowed. So let’s see a quick example of how this will be this will look in real world. very silly example, I have my table. And I have, oops, sorry, I have my table, my ID for this row, I have a name, and have phone numbers. And in this case, I have two for groups. Again, I have two phone numbers. So 5558884, they have only one. And for Peter, again, I have two phone numbers. So how could I look up for a single phone number, or how could I know if this space belongs to the phone number, or if this space is actually just separator with the, you know, extension for that guy. So the right thing to do is to split this into a user’s table, and phones table where you can have, you know, just my ID and the Name. And then on the other table, I will have an ID for this entry. And the user ID, which refers to this table and the phone number. And then I have for the same user, I have another phone number, then for Dave, I have his phone number here. And then for Peter, I have one and two entries. So that way, we can address the phone numbers individually. And this is probably one of the most important things you could do for your database design. Don’t embed, you know, the multiple values in a single column that’s against good practices, and it will make your life difficult down the road. Another normal form every column that is not part of the primary key shouldn’t be able to be infected from a smaller part of the primary key okay? This is more easily shown than explaining. So, let me try this I have my table you know project assignments and I have the project assignment has an employee and a project to which the employee is assigned. But in this case, I have the employee name here and have the project name here. And I could infer the employee name from the employee ID and I could actually infer the project name from the project ID. So, in cases like this, what you must do is actually never have these columns name and project here, you will have your own table with your employees you know and you will have your own table with your projects. And then you will have a single table with a composite primary key where employee ID and project ID combined are the primary key and then you will have any other data for that assignment for example, put it in this case like I was assigned 124 hours to do something. So again, did they call it concept is I was able to infer employee name from the project ID, so I don’t want to have it again here, I will keep it in a table. And if I ever need all this data together, I can use the relationships between employee id and id and project id and id in the project table and put them together using a join. By the way, this is what you know, an embedded document, like in MongoDB, it will look like this, it will have everything in a single hit, right? Like everything will be in a single document like. And again, if you want it to later, let’s say, update my name, you know, why is this important, right? Like? It’s important because if I want to update my name later, I will have to look up every row in every table, where I have put my name, versus being able to update it only in one table. So if I refer to employees via their employee ID, when I want to update something about employer-employee, I will do so in a single place. And instead of having to go to each table and each row where that about such employee will have a period. So that is the goal is to not duplicate data, and to have it in a single place to avoid anomalies to avoid introducing, you know, your application will later be at the risk of forgetting to update one of the rows or to forget to update one of the tables. So to avoid such possibility, the right way is to apply the second normal form. And here is another one, which is a bit more convoluted to say, but it’s not so hard to look at when we see the example. So let’s get into it. Third Normal Form, there shouldn’t be a non-prime attribute that depends on Transitively from the primary key. That’s a lot of words. And it, it kind of hard, it’s the kind of things that makes people not want to do databases when they are in college, I guess. So, here is again, we have you know, some books, a Books table, that is very badly decided, we will see in a second. I wrote a bit about how to barbecue, Dave wrote Hitchhiker’s Guide. And Peter wrote the MySQL High Performance book. So, you know, I have title and format as my primary key, you know, because I could have my How to barbecue book in hardcover and paperback. So to uniquely identify, I resorted to creating a composite key here. Right away, you can tell Marcos, this is not complying to the second normal form, because I should be able to infer the price from the format. So that’s not in second normal form. But further, the title is tied to the outer, and the outer country is dive to the outer. So the outer country is an indirect dependency to the title. And that’s the third normal form what the third normal form wants to avoid, he doesn’t want you to have data about this guy on this table. Because we could identify this guy via the title. And then later, we could identify the country via the author. So the right way to do this is first of all, apply the second normal form and make title format, a primary key in a secondary table in a separate table. And here we will have the prices. Here we will have the books and the books appear only ones with the altar, only ones and the metadata about the book. Because the price is not metadata about the book, it’s about the format for that book. So second normal form. And because that’s, this was primary key right here, you can see, it’s under underlining, it has another line, that in schema design when you’re designing schemas, you will normally see that people underlines something that is your primary key in the table, so I move it out the price, I kept my title and outer, the outer is not a primary key, it’s just an attribute of the book. And then I have a table with our authors and the country. And now this table complies to both the second normal form, and the third normal form. And, again, while this might seem going too far into breaking down things into smaller tables, and while it certainly will imply a bit more work, when you’re doing your joints, it will really make maintenance of the data itself cheaper. So you will not have to look for the outer country in every row and updated it everywhere, which is going to be super costly, you will only update it here, which is simpler, centralized, and less costly in terms of actual work done with performance, right? So this, these three rules, you know, are the bare minimum you want to apply. So these are literally, you know, don’t go on to writing your queries before you get the third normal form, apply it to your tables. So I’m not like if you have questions, please, you know, share on the chat. I know this is a bit confusing to say the least, and that it takes practice. But it’s so important that it’s hard to overstate it. So please take your time. There’s a plethora of material on the internet. I hope my explanation. It’s somewhat clear, but please ask away if you feel like referential integrity, so referential integrity, it’s Well, first of all, let’s say what are references, right, like when we were looking here, we said, okay, I can have my employee ID, and refer to it on the Employees table, and I can have my project ID and refer to it on my projects table. And then I don’t need to keep my project name and everything else in the project assignments table. What if I insert something in the project assignments table with a project ID that doesn’t exist? Right? Or what if I like what if I tried to now delete Project 99. And I forget to delete the entries in project assignments that were using Project 99. So that is the subject of referential integrity, making sure that when we have a reference between tables or so-called foreign key, so the project ID here is a foreign key to the projects.id column.

When I have those, I want to make sure that they are valid, that they didn’t, that they will not be created, or remove it while their parent doesn’t exist, or while a child is using in these relationships, that the project ID will be the parent table. And the project assignments will be the child table, right? It’s pointing to the parent. So referential integrity is about that I don’t want to insert a child row for a value that doesn’t exist in the parent table. And I don’t want to delete a parent row that still has any chance. So we don’t want to leave orphans. And we don’t want to create orphans. That is like an easier mindset, perhaps. So with that in mind, what does referential integrity helps us so it helps us prevent programming mistakes, you know, again, imagine you are just creating a form and you let the user insert their own project ID instead of offering them At least have an existing project as well. If you don’t validate that the project ID exists before, having a foreign key constraint will help you prevent the mistake, right, like there was a mistake, which was allowing the user to insert without checking the project existed. So, by having the foreign key constraint, you are having the database work for you. So it’s helping you prevent forgotten mistakes. All also in in a way, in a sense, it’s a self-documenting schema relationships, because if I see that my If so, these will be embedded in the data definition language for the table, in your creative, I should have included an example here, I can look at one very quickly in a moment, but, because it will be part of the table definition, I am looking Oh, this table depends on this other table. And you know, even if the table names are not obvious, even if I know anything about the system, I will know those two pieces of data are related. And that they can somewhat logically join it right they can be combined to form a bigger entity. So that is also quite important because it helps us have a more clear understanding of the schema. And also, if you’re using a relatively good model entity relationship, the site diagram program, like a piece of software that helps you design your entity relationship diagram, they will usually be able to read the data definition language. And they will be able to automatically generate the links between the tables. That this doesn’t come without drawbacks. While you were saving yourself from that, during that lookup, while you didn’t need our lookup, before allowing the insert of the project, the database is going to do the lookup for you. And you know, that has time cost like it really has to go to these, look up the page that contains the row you’re referencing, and make sure the row is there. Right. So it’s going to look up through an index. This means that the reference ID rows must be indexed. And they will be so because it should be the primary key of the table. And, but again, that’s adding a yo, and it’s taking more memory. So there is a cost. And also an indirect cost is that there will be more locks inside the database. So

for hearing it, if I try to if I do an update for an image for a project assignment, and then I want to delete the webinar, this project what will happen is that this row is going to be locked if you know, and until I don’t complete my update on the related rows, this row will remain locked. So for the duration of the updates on the related rows, the foreign key in the parent table will remain blocked because I cannot let this row disappear. While there are ongoing operations with related froze. So that’s adding locking, and I’ve seen many times how this will introduce deadlocks, and it will introduce big amount of roadblocks like simple row looks, you know that if you’re updating a million rows, you are now looking at a million rows on this table and a million rows potentially or a have X amount of rows on the parent table. So it can add up quite quickly.

And then it also brings some management issues. For example, the order in which you load the data. So you will have to first load the parent table, and then the child table, because you can not insert into the child table until the rows exist on the parent table. Because if they don’t exist, then they are breaking referential integrity. And that makes things a bit cumbersome to say the least. And if you have like circular dependencies, you know, like, if I have you can actually self reference a table directly, that’s the easiest to explain, like, I can imagine you’re trying to do a nested structure of files and folders, right. So you have your file ID and parent ID and the parent ID must be a folder, but the folder could be inside another folder. And that will have you know, it will reference it will self reference the same table to another ID. When you want to load that table, it’s going to be damn hard, you know, you cannot just insert the rows re-insert the rows linearly. So what we normally do in those cases is to disable foreign keys to allow the table to load without special procedures, because otherwise, you will need to untangle the dependencies and insert top bottom. So you will need to insert all the parents first then all the child’s then all the second level child’s and so on. So to prevent that, what we normally do is just disabled foreign key checking for the duration of data load operations, that is quite common. And it should be totally valid if you already had foreign keys on the source of the data dump, because then the referential integrity, it’s already guaranteed. And there is one more bit I forgot to put on my bullets, but basically, we said you cannot delete from the project’s table if there is a steel project assignment for that project. Well, you can, if we do, if we add a definition of the constraint, we can add a cascading effect. So we can set that on delete cascade, meaning that when I delete on the parent table, it will delete will cascade to the child tables. And it will delete the rows that corresponded to the deleted parent. So if I delete ID 99 on the project’s table, it will delete my row with Project ID equals 99 on the Prospects assignment table. So, again, this is done to guarantee referential integrity, I don’t want to delete without deleting the other one or so, there are two options either delete and cascade or I failed the Delete, and then the application will have to take care of deleting in the child tables. So, this brings quite an issue sometimes. So use with care. And again, you know, it is a very good idea to use it for critical data, right? Like if you have money or items that are you know, your inventory, you know, like okay, inventory, it’s good one. I’m not sure I will do it for a blog system and the comments or you know, for I don’t know, my agenda or whatever right like, I will try to use with care and not over bloated because again, it has a notorious performance impact. So you know, use with care. But do keep in mind, this is the right way to guarantee data consistency among the tables.

Okay, and PBT primary keys, this is also something quite important, and people usually don’t understand very well how important it is. So I’m going to talk about MySQL, like, this webinar series is about MySQL. And within MySQL, you know, like, we could talk about many storage engines, but I’m gonna talk about the one that 99.5% of people care about, which is inaudible. So, in in ODB, primary keys are clustered keys, what does that mean? It means that the primary key will be A B three, a B plus three. And that at the bottom of the cluster, that key off the primary key in the so-called basement nodes or leaf nodes, we will find not only the value of the primary key 50 and 80, and 2030. But we will also find the whole row for the primary keys. So you know, primary key 15, its employee ID, 34, Bob, and then you have 77, Alice, and so on. So, the point is that you don’t have like separate storage for the row like you will have in Postgres, or you will have in Oracle, I guess, the key is part of the B tree. So they are one of the same. And this has some benefits and some drawbacks. The benefit is that when you’re addressing through the primary key, you can immediately find, you already get the row that makes primary key lookups faster. The drawback is, if your row grows very big, you’re gonna have more basement pages. And that can be detrimental to performance. So, you know, it’s kind of hard to decide which model is best. But the important thing is to keep in mind, the keys are clustered keys, and we want to have the rows in some order. In this case, they’re going to be in the natural order of the primary key. So you can see that, for example, employee id is not in order, right? But if I wanted to scan the range of primary keys, I can say, Okay, give me any employee with a primary key between 819 and 50. And I can very quickly, you know, start here and here because it’s all always connected through the bottom notes. And then a secondary key will look like this, you know, for example, if I order if I have a secondary key or names, my B tree will look like this. Alice will Sark. And then to the left, they will have Alex Eric rose, and then it will continue to split the tree to branch out. And at the bottom, I will have the primary keys. So Alice is 18. And, you know, I don’t need the 77 was the employee ID, right? So I don’t want that I want the primary key. So that once I find the primary key, I can go back to the primary key and find the whole row. So, I don’t have pointers to the row on the secondary keys. But they have pointers to the primary key, which is itself the row. So that’s one part about, like, keep your primary skis. So all the story about this is it’s important to keep playing requests tight, and light, right like to make it as lean as possible. And to understand how primary keys can become fat, I took a very common example, which is, should we use universal, unique IDs, or so-called UU IDs, instead of an integer. Many people will love UU IDs because they feel it’s safer. If you have distributed, writes, you know, if I write here, and I write here, and my I used UU ID, I will never have a conflict. That’s true, as long as you’re only ever inserting data. As soon as you do delete and updates, then God knows what’s going to happen. So the certainty that primary keys, using UU ID, I will answer that question in a second, please hold on. So the idea that having UU IDs as the primary key is going to prevent any conflicts, it’s a lie. It’s not true, it’s an illusion. So don’t rely on that. And here’s why. It’s not only not going to save you from the consistency issues, but it’s also going to make your table much, much bigger. In this case, this example we are seeing here, what we have seen here is a map of the age of table pages. So each table on my page has sampled the so-called Ellison the logical sequence number, which is a number that goes from zero to 64 bits, which is many, many, many billions. And we base it on the logical sequence number, I can tell the age of how old a base is not in days or minutes, but relative to the whole sequence number, right? And in this case, you can see on the bottom, you know, my minimum is 38 million, and my maximum is 342 million. So the blue is older, and the purple is newer. So the first 128 pages are the table header. And you know, this is always going to be actively updated, more or less. But you know, there’s some purple in the first few pages. That’s because when you update the table, and you add a new page, or you read or a page gets removed it, you will have to update the file descriptor, which lives around there. So you know, these first 128 pages, are always updated. And they are, again, the table header. But then you can see, right? It’s quite linear. And while yeah, here I have some red, and then a bit of green, you know there was an update that updated some rows here in line 343 384, you know, it must have had an update that that explains why in line 384, I see some red before I see some green on 448. But the point is, you know, it’s growing forward. And if I want to read the last week of data, or the last rows I inserted, if I only work with an active data set, I will only have to fetch pages from the last segment of the table. So it’s not fragmented, right? What I’m what this implies it’s not fragmented. And the ranges of recent rows are easily accessible with few reads, you know, I don’t have to read the whole table to find the rows I updated today.

Which is, you know, a very common pattern in databases. You know, I keep all my orders for the last five years, but actually only deal with orders from the last two or three weeks of sales. And I keep all the information for all the students I have for the past, you know, 20 years. But I only work with the information of the students that are active this year. And so the time element in a database is quite important. And having the rows in having the pages that were last updated, easily accessible in a sequence, it’s going to improve performance. Let me ask them second question, how they hide the details. So yes, a large data modification query, you know, if you do an update of all your rows, well, it depends on what the update is doing. Like, I can think of like, let’s say, adding, I have a blob column, and I’m adding or removing a lot of data, then probably an optimization to rebuild the table will be in order. If I am only updating one column, that it’s a secondary, that it also belongs to a secondary key, then usually you don’t, you will need to update it, let me tell you, this is always an InnoDB page. It’s 16 kilobytes. And as long as you’re not changing the size of the row, so that it will, you know, stop fitting inside the page, or such that it will now shrink so much that it will leave a lot of the page empty, then it’s okay to not rebuild the table, what it’s important is to rebuild the table, after you do an update, that will drastically change the size of the rows. Because that will most certainly lead to fragmentation. So it’s not mandatory to an optimization when you do an update. If you’re updating a date, or you are updating an ID, and you’re not changing the size of those, then it’s fine. If you are updating a string, and you are changing the string from 10 bytes to, you know, 500 bytes, then probably it’s going to end up creating a lot of fragmentation, so you will want that. And this h map is created with a tow tool called InnoDB. Ruby. It’s from Jeremy Cole, who has a which is a longtime contributor to the MySQL ecosystem. I will get you the link in a second. If you don’t mind me looking for just one second, please. During roll, the beautiful be me. Okay, here it is. And how do I place this on a chat? I will give it to my friend David, who will then share it with you because I have no clue where the chat is here. All right. All right. All right. So oh, I private chat are all private, I want to share with everybody I’m a very sharing person. Okay, I cannot find it. But my friend David is going to share it with you in a moment. So again, you can see that for this table, I took less than 700 pages. And it’s all neat, neat, and tightly packed. That’s because the outer increments are, you know, an outer increment ID it’s monotonically increasing. So it’s always going to be filling up pages on the right side of the tree. And the way B trees are rebalanced it is by pulling up one of these nodes later, right like once, like if the right side of the tree grows and grows and grows, you’re going to have an unbalanced tree. So to rebalance, what you do is you pull up one of the nodes on the right to become the top, the one on the top. And that’s the way you normally will rebalance the key and that’s why you want to always insert linearly on the right side. I see. Okay, now I thought there was another question, but there were no more questions. So again, monotonically increasing keys will result in something like this. You could have ordered UU IDs. So you IDs do have a date component, you use the definition, you UID I think is the second segment or the app, who cares. But one of the parts of the UID is a timestamp. So that’s monotonically increasing, that’s perfect. Now, it will still have 16 bytes. And it literally took almost double the pages in my table. So it’s neatly ordered, but it’s using much more pages. And remember one more thing, that is one more thing, bigger. Primary Key means bigger secondary keys, because, remember, the secondary keys have the primary key embedded in them. So if you have a secondary key that is four bytes per row, and now you’re adding like, you know, 16 bytes to that secondary key, instead of having a secondary key that will be eight bytes, you know, for the secondary key. And for the primary key, you don’t have a secondary key that is 20 bytes. So, as you add into the many millions of rows, these kinds of small differences will start to add up. And they can make a major impact. So yeah, you can have you UID that it’s ordered, and it will be a nicely neatly and tight packet. But it will be heavier, it will still have larger footprints on disk and in memory. And then, this is the result of using UID. Without, you know, ordering, like without putting the date first. Because this one is by chopping down the UID and putting the date first such that it will be ordered by time in a monotonically increasing way. This is the row UID. And you can see it got scattered all over the place, right? Like every page, it’s permanently updated. So whenever I want to pull one of my baits like imagine I inserted 20 rows today, to fetch those 20 rows, I only have to fetch the last page. But here, I’m gonna have to fetch anything between one and 20 pages because the rows ended up all over the place. That the meaning of this. The purple is telling me, the rows being inserted and updated are all over the place, I have to access all these. And each of these, it’s representing you’re like, I have to go to this to fetch the page. And I have to go to this to write the page. And it’s not the same page. So I’m gonna have to do double the work or XR x more amount of work because I have to touch every page. And this is the worst thing. One of the worst things you can do for your database is like it’s causing fragmentation. Yes, it’s very bad for the buffer pool. And it’s very bad for your disk. It’s literally looks, it’s taking 2000. Actually, it’s taking more pages, it doesn’t even fit in here.

But you know, it’s like my, my original database is taking 1234567, let’s say eight rows of pages, right, like less than 400 pages, something like that, or 500 pages. This one is taking 1-234-567-8910 1214 rows, you know, so let’s say 800 pages. This is taking 1000s Again, doesn’t even fit in on the screen. So it’s one of the most detrimental things you can do for your database is using non-ordered UU ID and If you can’t avoid it, just don’t use UU IDs. Again, it’s not saving you from anything. Because, again, it will only help you avoid conflicts. If you only ever do inserts on the table, as soon as you do a Delete on the table, you could be deleting rows from anywhere. And you know, because again, this UU ID, you know, Francie comes from people that want to have multi-master, because they have one node in when Osiris and OneNote in Montevideo, or, you know, they have one node in New York and another in San Francisco. And they want to be able to write locally to both. And so on way to say, okay, my primary keys are not going to conflict, and they will be able to identify the rows uniquely, is by using UU ID. But now, let’s say someone goes ahead and does DELETE FROM users WHERE age less than 13, you know, because we want to keep our kids safe. And then on the other side, someone says, update, you know, I don’t know, update allowance equal to something where city or where school name equals whatever. And you know, like, you are going to be touching the same range of rows on both sides, because you’re not always accessing rows via the primary key. We many times will access rows via secondary keys. And as soon as you do that, you can have overlapping ranges on the tooth on the two sides. And then go, there goes, your consistency, consistency is no longer true. So using UU IDs is not going to do anything for your consistency. So don’t use them just don’t do it. And yeah, a good guy to my sequel UID functions. I will try to show you a neat trick in a moment. But let’s finish this. And what about using unique keys as your primary key? Yeah, valid, that is perfectly fine. And as a matter of fact, in ODB will do it. So let me explain this. In ODB, we said a moment ago, it uses clustered key. So it basically cannot live without a primary key. So if you don’t have a primary key, in ODB, we’ll add one for you. And it’s going to be 36 bytes to truly the whole world. And that’s the worst thing you can do is not add a primary key, that’s probably the only thing worse than using a UU ID key is not using a key because the B is going to choose one for you. It’s going to be a huge one is not monotonically increasing, and you have no control on it. So in order to be, you know, because it so desperately needs a primary key to actually store the row somewhere. If you don’t define a primary key, but you define a unique key that has no new level columns, it will pick it up as the primary key itself. And it’s totally valid, it’s perfectly fine. You don’t need to add extra columns to be the primary key. Make sure it’s not huge, it cannot have nullable columns. And again, make the leftmost column and I didn’t put that here, I should have make the leftmost column monotonically increasing. Because again, otherwise, you’re going to end up with the same problems than UU IDs.

So yeah, unit keys are perfectly good. Just make sure they are not 300 bytes wide, because it’s gonna make your secondary Skeets your secondary keys be very large. So you know, not a bad idea. It’s perfectly valid. And and again, InnoDB is gonna do it for you if you don’t define a primary key and you do define a unique key, but you know, might be less than ideal in some situations. And then very quickly, because we’re almost at the top of the hour. We’re going to take a look at datatypes. Again, data types are the kind of They defined data types will define a few things, basically, what I have listed here, and which is the crux of the topic. First of all, it will tell, okay, is this a numeric datatype? Is this a date is this a string, spatial is for geo information systems. So, you know, geometry line point, etc. So they allow us to write queries to locate things on a map. Very important if you’re into mapping stuff. And then JSON, which is a special type of string. So, firstly, we have those types. And then we have position and status requirements. So, I can have numeric type, with more or less precision, or I can have daytime with more or less precision string will only have how much of a string, how big of a string I can store spatial, doesn’t have any kind of precision and JSON either. And then storage requirements. And this is the most important bit, you know, that every data type has different amount of bytes on disk. And if I store the number 123, on an integer, I will always use four bytes. Even when I could have started that on tiny int, that is only one byte. And this is really again, you know, when you’re talking about many millions and billions of rows, then this becomes important. And again, your data type is not only going to be on the road, it’s going to be on the secondary keys. And it’s also will need to be referenced probably in other tables. So if I start using, I will believe this is the most common mistake people do. They go for begin, you know, they use big int in their primary keys, because you know, I will ever run out of begins. And you’re absolutely right like big enough is enough to count the ants on the earth, like, you know, like should be enough to count the ants on Earth. And I’m sure most people doesn’t have answers customers. So there is no point in having begin for your customer ID and, and then you will have to reference your customer ID in 10 other tables. So you’re always wasting bytes, not only in one table, you’re wasting bytes everywhere you’ve referenced the table. And again, it’s not going to break things, it’s just going to make your database perform less optimally, is going to cost you more on storage, and it’s gonna cost you much more on memory. So choosing the right data types, it’s important, and I realized, you know, I will admit I did this is like it’s a bit of a rush. And I stupidly forgot to add the example range. But for example, tiny int can go between minus 127 to 127. Or you could do an unsigned, tiny int, which will go between zero and 255.

Small int goes between minus 30 206 3200 767 to 32,768. And if you do unsign it that it goes between zero and 65,000 Blah, Blah mediavine goes between maybe minus 8 million to plus eight millions, or if you do unsign it it is between zero and 16 Millions. So using the appropriate data type is saving you everything you do, it’s done with bytes, you know like so, if you’re saving yourself a few bytes, you’re saving yourself, those few bytes from every single operation you do reading from disk, allocating memory comparing, you know, compressing everything you do, everything you do is going to be done with this data. So So, as you shrink your data types, those operations are going to become more efficient, and your database is going to run faster. So this is really so important, so important that it’s, you know, hard to understate, and people tend to abuse data types. And just use very big ones, you know, they use var Char 255, for everything. And they use int, and begin for everything. And you know, it’s a blanket. And I empathize. It takes time and effort to identify proper types. But it’s worth the effort, especially if you’re just starting. And you can define the domain of your database from scratch, you know, do take the time, and you will enjoy better performance in the long term. And I don’t have too much more to add to that. So perhaps anybody else has some questions, and we can stay a few minutes. Otherwise, that was my humble presentation about the schema design.

Dave Stokes:

Let me get off mute. Something to reiterate, if you use big ant for your primary key, and that fields are indexed, you have two big issues for every record, and then you add a secondary index or two. So suddenly, you have three or four big ones out there, sucking up memory, sucking up your space. And you do that a few billion times, and things get messy.

Marcos Albe:

Yeah, they do. They think like, again, where you were able to fit to because, you know, an int is four bytes. So where I was able to fit two primary key values, now I’m only able to fit one. And she said it’s costly.

Dave Stokes:

As someone who did a lot of his early career writing assembly language programs, that always seemed rather upsetting to me. But oh, well, let’s see. Yes. Question from

Marcos Albe:

Yeah. Juca has a very interesting comment. So let me tell you something, we fully know it happens. But the limit for unsigned int, four bytes is 4 billion, something, it’s a big number 4 billion-plus. So there are two ways that this can happen. First of all, you probably didn’t use unsigned. So unless you need to use minus like negative values, make sure you always use sign it, because you perhaps you still have half the range to use, and you just hit the limit at half the range. And the other one is. But there are two more ways that things can go wrong here. One is, if you use auto-increment an hour to increment offset with somewhat large values, you know, you’re going to be leaving gaps in the range everywhere. So those outer incrementing comments out increment offsets, they were also meant to do multi-master replication to avoid conflicts, like, oh, I will write all my 1369 12 here, and I will write all my two four, you know, like, crazy stuff, like really crazy stuff. And what happens is, not only is not gonna save you from conflicts later down the road, like we said, for UU IDs, the same is true for staggered primary keys into notes, like it’s not going to save you from like, if you write all your alts here and all your evens here, you will stand up and end up with conflicts when you do updates or deletes via a secondary key. You are going to be leaving gaps, you will probably be leaving big gaps in the in the range, so you’re depleting your range. So where you will be able to fit for being a rose, perhaps you only fit 500 million or 1 billion and the rest is pure gaps. So don’t use out incrementing from a slash-out increment offset. And the other problem could be with the InnoDB auto Inc lock type. So to avoid locking the auto-increment, you know the beat does some complex trickery to allow that some complex trickery to allow you to do multi row inserts and big insert select queries without having to log it but that will reserve chunks of the range and then if something gets rolled back or the insert fails or whatever the Range might go and use it. So, you know to be outgoing Glock equals two could be causing your integral range to be depleted faster. And I see do get posted another one. Usually the problem is not changing primary from integer to unsigned int. Oh, yeah, changing related Java code. You’re totally gonna have to deal with that. But that’s a programmer’s life. Not that I’m just kidding. But yeah, I empathize that it will indeed require a UL Type on the so sign it and large I think it is. I think it sounds fine at large. At least that what it’s in C, I cannot recall what it’s in Java. I’m very poor at Java. Datatype and type languages. They go hand in hand. And they can be messy. And yep, it will. Indeed. That’s why all rooms are both a nightmare. And a bless. Because, you know, it’s easy to, to actually save yourself from that.

Dave Stokes: Yeah. And programmers, make sure you check those return codes, you might assume it’s something okay. But it’s fun to come back and have the system telling you no, we’re out of space, or no, there’s a conflict, and have that ignored and come back two weeks later going well, where’s all this customer data?

Marcos Albe:

Yeah, as a matter of fact, we one of the checks we do, for example, in managed services, and that we’re implementing for PMM is checking your integrity, your ranges for the primary keys, like Are you about to run out of primary key range. So we have that in as part of our monitoring? Because we know it happens. And it’s, like, devastating. So yeah, it’s a very bad situation to be in. So monitoring that is important. It’s part of the monitoring deal.

Dave Stokes: As an analog, the Postgres, we’re all using 32 bit integers. And their primary keys are 32 bit integers. And there are actually cases where if you’re not doing your table maintenance, you run out of 32 bit integers. And suddenly, nothing can go in the table. And one of the things can happen is, in rare cases, it starts overwriting numbers, and all your old data is gone. And your new data is there. But it’s not really gone. You can still get to it. But not really you need a a ninja level coder to be able to get to the stuff. So that’s one thing that MySQL is clustered index does do for you that you don’t have to worry about.

Marcos Albe:

Yep, the data will be there at least.

Dave Stokes:

So we had a quick question about the flame graphs that you were displaying how those

Marcos Albe:

Again, those were, I gave you the link in Slack in our slack because I couldn’t put it in the chat. I cannot find public chat. I can only find the private chat. So hold on, folks. I will get that out there.

Dave Stokes:

That Jeremy Cole’s Ruby thing. Okay.

Marcos Albe:

Yeah, this is from Jeremy Cole. Jeremy Cole. It’s a dear friend of Percona. And I’m a great contributor to the MySQL ecosystem. And he, as part of his study, into InnoDB, he wrote this tool, along with a series of diagrams that I consult periodically, that they lay out, they showed the layout of the InnoDB internals, for the base, the row, the B trees and everything else, really great material. Like you can check in his GitHub repo. There will be one that is called into the beat diagrams. I think let me see children.

Dave Stokes:

Oh, by the way, ambitious Ruby project I’ve ever seen.

Marcos Albe:

Yeah, the other one is called InnoDB diagrams. And it’s literally all the diagrams that describe this storage format and layout for indies super useful. Yeah, well, again, you know, that’s where I will tell you don’t let Galera eat your out increments. Disable Galera like, first of all, always write to a single node in Galera. Like, always write to a single node in the letter, don’t do multi-master in Galera, that’s guaranteed to be free of conflicts, but in a sense that it will never store a row with a conflict, but it’s going to roll back one of the transactions, and it’s gonna force you to write that transaction again. And that’s going to cost you performance. And on top of that, then you’re going to have to let go later. Do your out increment. Thinking I mean think, don’t do it, just write to a single node and disable that is a WS rep auto increment control.

Dave Stokes: Or let me check off the same information for InnoDB cluster, right? Run single primary not multiple primary right to one, using like 4467, or whatever the port is and let it the more you try to outsmart the software, the more you’re going to set yourself on fire.

Marcos Albe:

So I just asked A to share the name of the variable. It’s ws rep, auto increment control, that will tell Galera if it should, you know, deal with the auto increment out increment offset? My take my personal advice, you know, is no, don’t let Galera do that, you know, just use sequential 1234567. And write to a single node always. And if needed, use proxysql in front so that it can send the rights to the designated writer. And when that writer fails, it will pick another writer. But don’t, don’t use ws rip out increment control on disable it. And make sure out increment increment equals one out increment offset equals one, and you will have longer lasting integral ranges.

Dave Stokes:

Well, thank you very much, Marcos. And thanks to our audience. If you do have questions or suggestions or stuff you want us to cover, please let us know. And with that, thank you all very much and have a great day.

Marcos Albe:

Have a good day, everybody. Thanks for attending. Bye-bye ∎