Our PostgreSQL cluster is running, and the demo app is generating traffic — but we have no visibility into the health of the Kubernetes cluster, services, or applications.

What happens when disk space runs out? What if the database is under heavy load and needs scaling? What if errors are buried in application logs? How busy are the network and storage layers? What’s the actual cost of the infrastructure?

This is where Coroot comes in.

Coroot is an open-source observability platform that provides dashboards for profiling, logs, service maps, and resource usage — helping you track system health and diagnose issues quickly.

We’ll deploy it using Helm via ArgoCD, continuing with our GitOps workflow.

This is Part 4 in our series. Previously, we:

- Set up ArgoCD and a GitHub repository for declarative manifests (Part 1)
- Installed a PostgreSQL cluster using Percona Operator (Part 2)
- Deployed a demo application to simulate traffic and interact with the database (Part 3)

All infrastructure is defined declaratively and deployed from the GitHub repository, following GitOps practices.

So far, we’ve explored cluster scaling, user management, and dynamic configuration — and now it’s time for observability.

We’ll install Coroot by following the official documentation for Kubernetes.

Steps ahead:

- Install the Coroot Operator
- Install the Coroot Community Edition

Let’s get started.

Project Structure

We already have a postgres/ directory for PostgreSQL manifests and an apps/ directory for ArgoCD applications.

We’ll preserve this layout and add a new coroot/ folder for clarity. You can use a different structure if preferred.

Create Manifest for Installing the Coroot Operator

The documentation recommends installing via Helm.

Since we use ArgoCD, we’ll create an Application manifest that points ArgoCD at the Helm chart instead of running helm install by hand.

Create file: coroot/operator.yaml

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: coroot-operator
      namespace: argocd
    spec:
      project: default
      source:
        repoURL: https://coroot.github.io/helm-charts
        chart: coroot-operator
        targetRevision: 0.4.2
      destination:
        server: https://kubernetes.default.svc
        namespace: coroot
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
          - CreateNamespace=true

Note: I’m using version 0.4.2, which was current at the time of writing.

To check available versions, use this GitHub link or Helm CLI:

    helm repo add coroot https://coroot.github.io/helm-charts
    helm repo update
    helm search repo coroot-operator --versions

Create Manifest for Installing Coroot Community Edition

Create file: coroot/coroot.yaml

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: coroot
      namespace: argocd
    spec:
      project: default
      source:
        repoURL: https://coroot.github.io/helm-charts
        chart: coroot-ce
        targetRevision: 0.3.1
        helm:
          parameters:
            - name: clickhouse.shards
              value: "2"
            - name: clickhouse.replicas
              value: "2"
            - name: service.type
              value: LoadBalancer
      destination:
        server: https://kubernetes.default.svc
        namespace: coroot
      syncPolicy:
        automated:
          prune: true
          selfHeal: true

This chart creates a minimal Coroot Custom Resource.

I’ve added service.type: LoadBalancer to expose a public IP.

If you don’t use LoadBalancer, you’ll need to forward the Coroot port after installation:

    kubectl port-forward -n coroot service/coroot-coroot 8080:8080

Create ArgoCD Application Manifest

Since we manage our infrastructure via a GitHub repository, we need an ArgoCD Application that tracks changes in the coroot/ folder.

Create file: apps/argocd-coroot.yaml

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: coroot-sync-app
      namespace: argocd
    spec:
      project: default
      source:
        repoURL: https://github.com/dbazhenov/percona-argocd-pg-coroot.git
        targetRevision: main
        path: coroot
      destination:
        server: https://kubernetes.default.svc
        namespace: coroot
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
          - CreateNamespace=true

This lightweight app will monitor the folder and apply updates automatically if a change is detected (e.g. chart version bump).

Define Chart Installation Order

We have two charts: operator.yaml and coroot.yaml, and the operator must be installed first.

Create coroot/kustomization.yaml to specify resource order:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    resources:
      - operator.yaml
      - coroot.yaml

Publish Manifests to GitHub
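Before committing, it can help to render the coroot/ folder locally and confirm that kustomization.yaml is valid and picks up both Application manifests. The following is a minimal sketch, assuming kubectl (with its built-in kustomize support) is installed and the command is run from the repository root; render_coroot is a hypothetical helper name, not part of any tool.

```shell
# Hypothetical helper: render a kustomize folder (default: coroot/) without
# applying anything, and print only the kind and metadata.name of each resource.
render_coroot() {
  # `kubectl kustomize DIR` writes the rendered manifests to stdout.
  kubectl kustomize "${1:-coroot}" | grep -E '^(kind|  name):'
}
```

Running render_coroot should list two Application resources, one for the operator and one for Coroot. If the command fails, the kustomization.yaml or one of the referenced files likely has a syntax problem worth fixing before pushing.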

Check which files were changed:

    git status

Add changes:

    git add .

Verify staged files:

    git status

Commit:

    git commit -m "Installing Coroot Operator and Coroot with ArgoCD"

Push:

    git push origin main

Apply ArgoCD Application

Deploy the ArgoCD app that installs Coroot from our GitHub repository:

    kubectl apply -f apps/argocd-coroot.yaml -n argocd

Validate installation and sync:

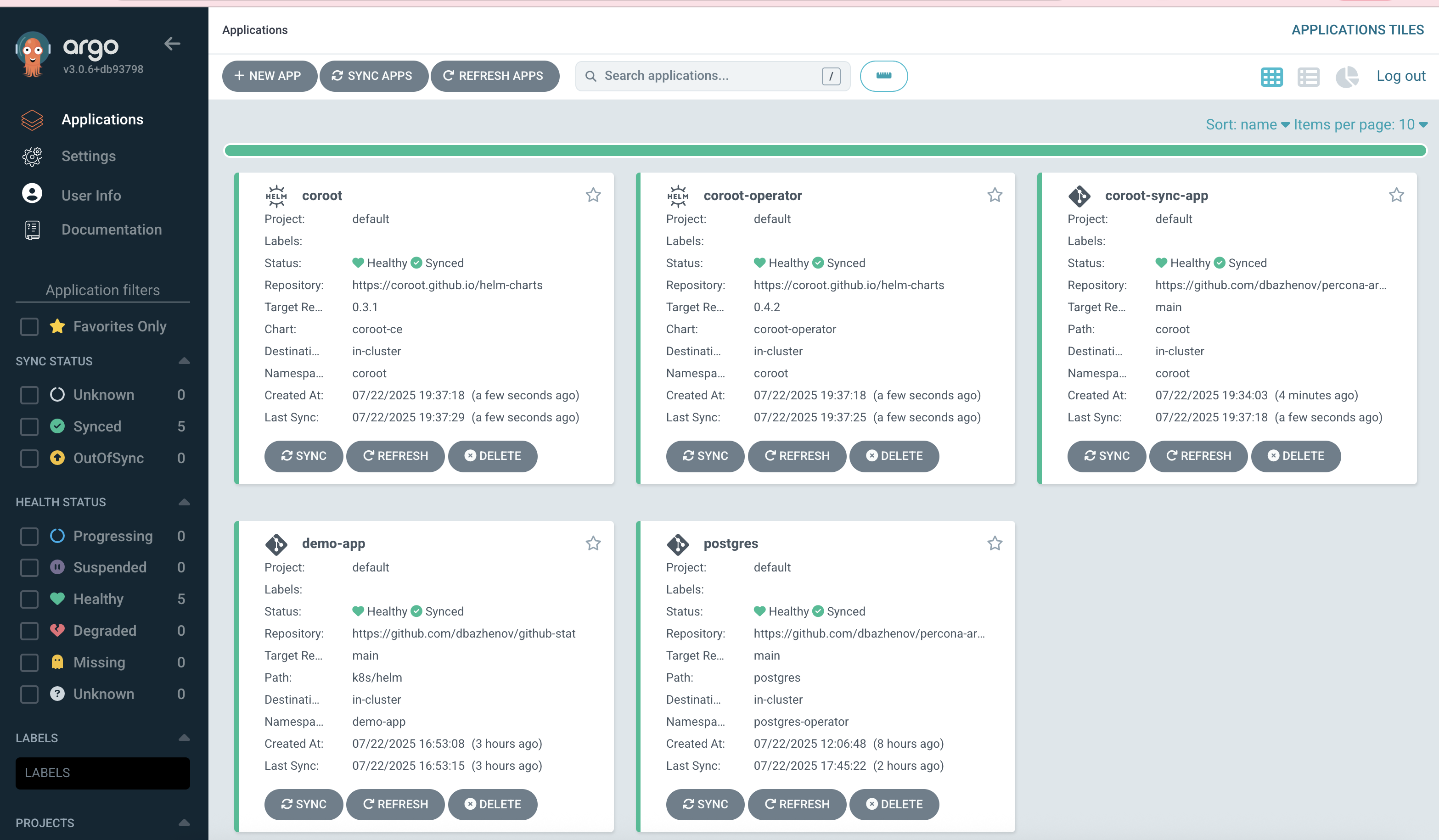

We now see coroot, coroot-operator, and coroot-sync-app deployed.
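The same check can be done from the command line instead of the ArgoCD UI. This is a rough sketch, assuming kubectl points at the cluster where ArgoCD runs in the argocd namespace and that the three Application names match the manifests in this post; argocd_app_status is a hypothetical helper name.

```shell
# Hypothetical helper: print sync and health status for each Coroot-related
# ArgoCD Application created in this post.
argocd_app_status() {
  for app in coroot-sync-app coroot-operator coroot; do
    # ArgoCD records these fields in the status of every Application resource.
    status=$(kubectl get applications.argoproj.io "$app" -n argocd \
      -o jsonpath='{.status.sync.status}/{.status.health.status}')
    echo "$app: $status"
  done
}
```

Calling argocd_app_status prints one line per Application. A fully deployed app is normally reported as Synced and Healthy; other values usually mean the sync is still in progress or needs attention.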

Access Coroot UI

Since we deployed Coroot using LoadBalancer, retrieve its external IP:

    kubectl get svc -n coroot

Open EXTERNAL-IP on port 8080.

For example: http://35.202.140.216:8080/
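If you prefer not to read the IP from the table by eye, the URL can be assembled with a jsonpath query. This is a sketch under two assumptions: that the LoadBalancer service is the same coroot-coroot service used in the port-forward command, and that the cloud provider reports an IP address (some providers report a hostname instead, in which case the ip field is empty). coroot_url is a hypothetical helper name.

```shell
# Hypothetical helper: build the Coroot UI URL from the service's external IP.
coroot_url() {
  # Assumes the service is named coroot-coroot, as in the port-forward command.
  ip=$(kubectl get svc coroot-coroot -n coroot \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  echo "http://${ip}:8080/"
}
```

Call coroot_url once the service has been assigned an address; until then the IP part of the URL will be empty.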

If you didn’t use LoadBalancer, run port-forward:

    kubectl port-forward -n coroot service/coroot-coroot 8080:8080

Then visit http://localhost:8080

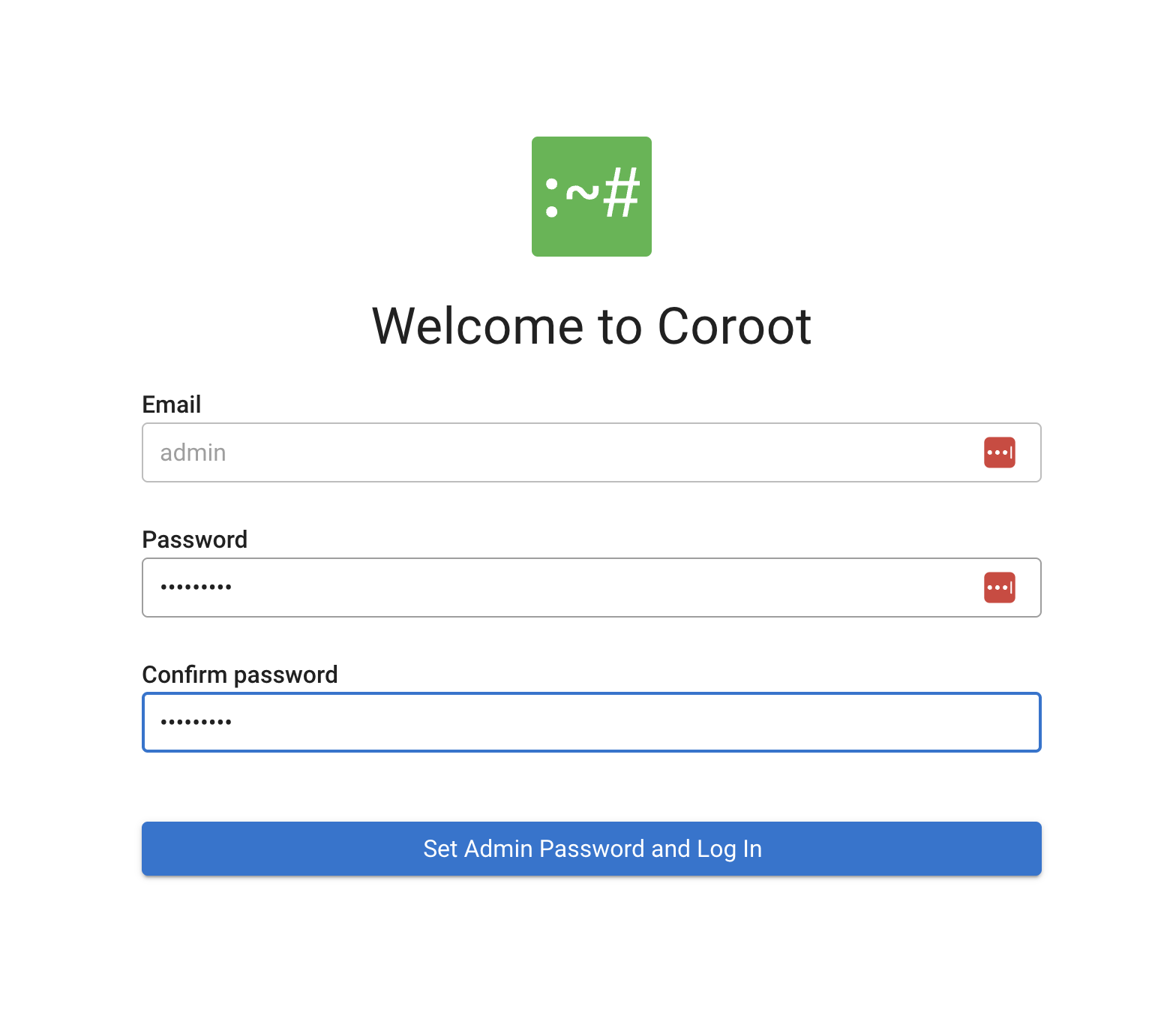

You’ll be prompted to set an admin password on first login.

Exploring Coroot UI

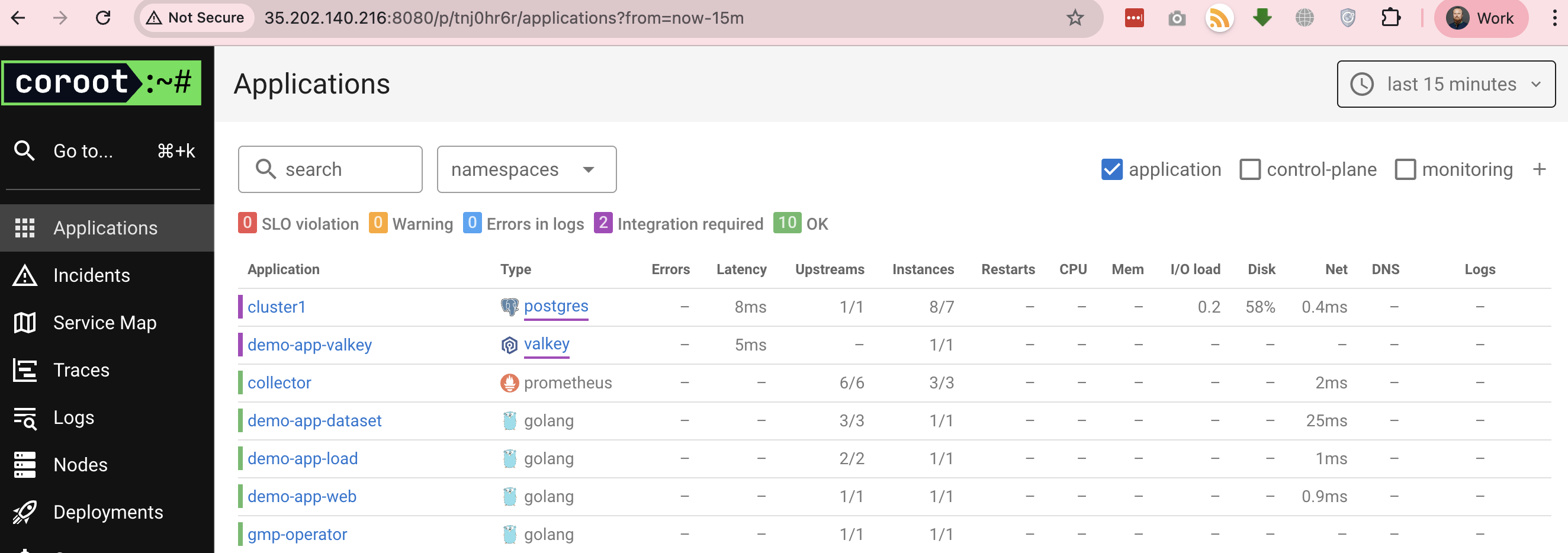

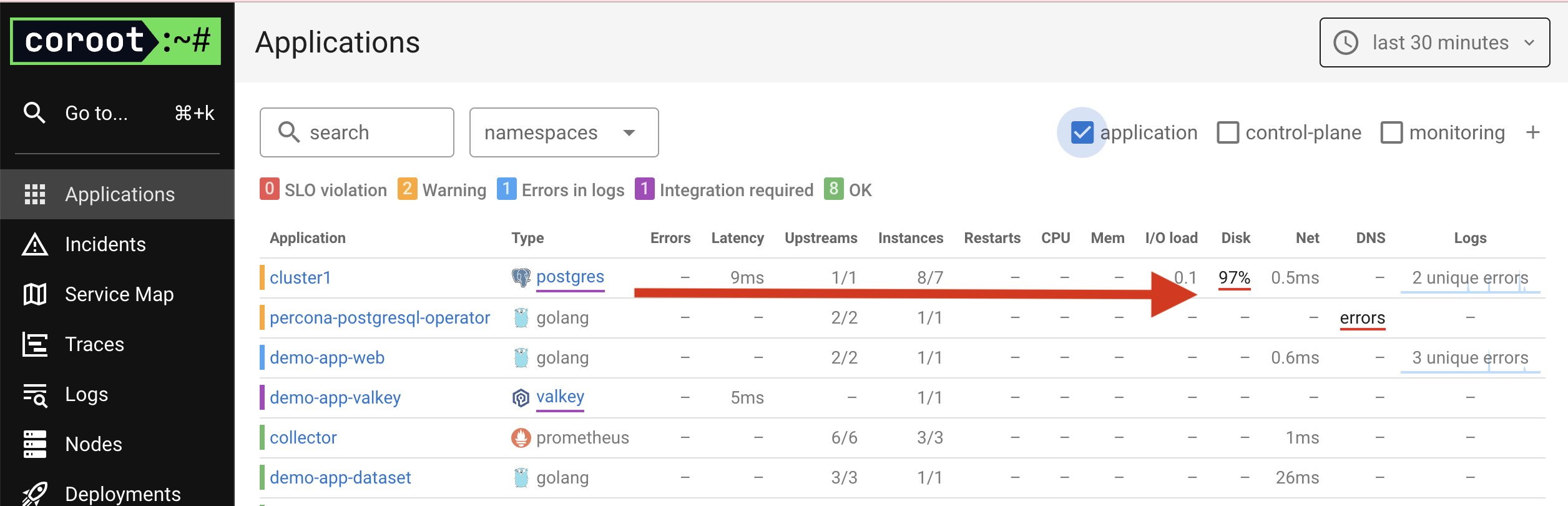

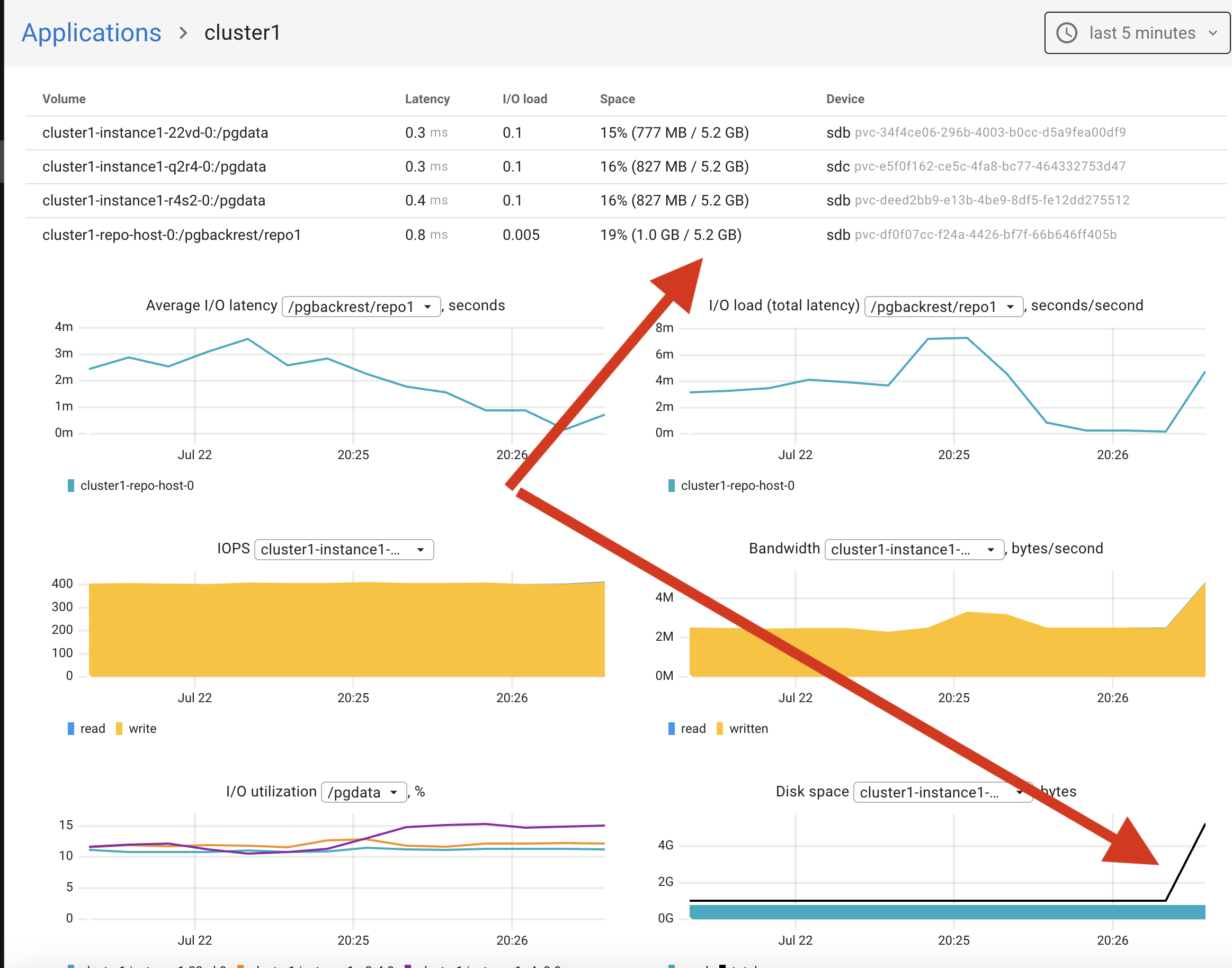

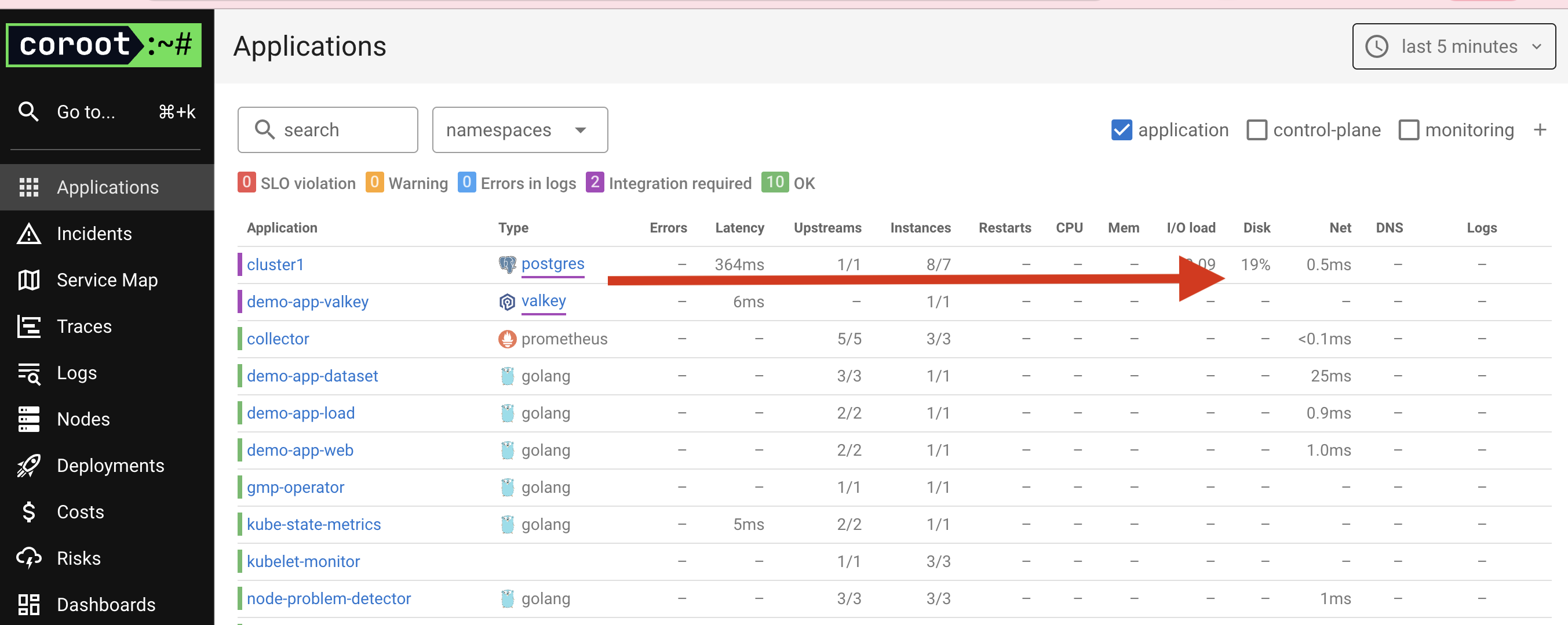

On the home page, we see a list of applications running in the cluster and resource usage.

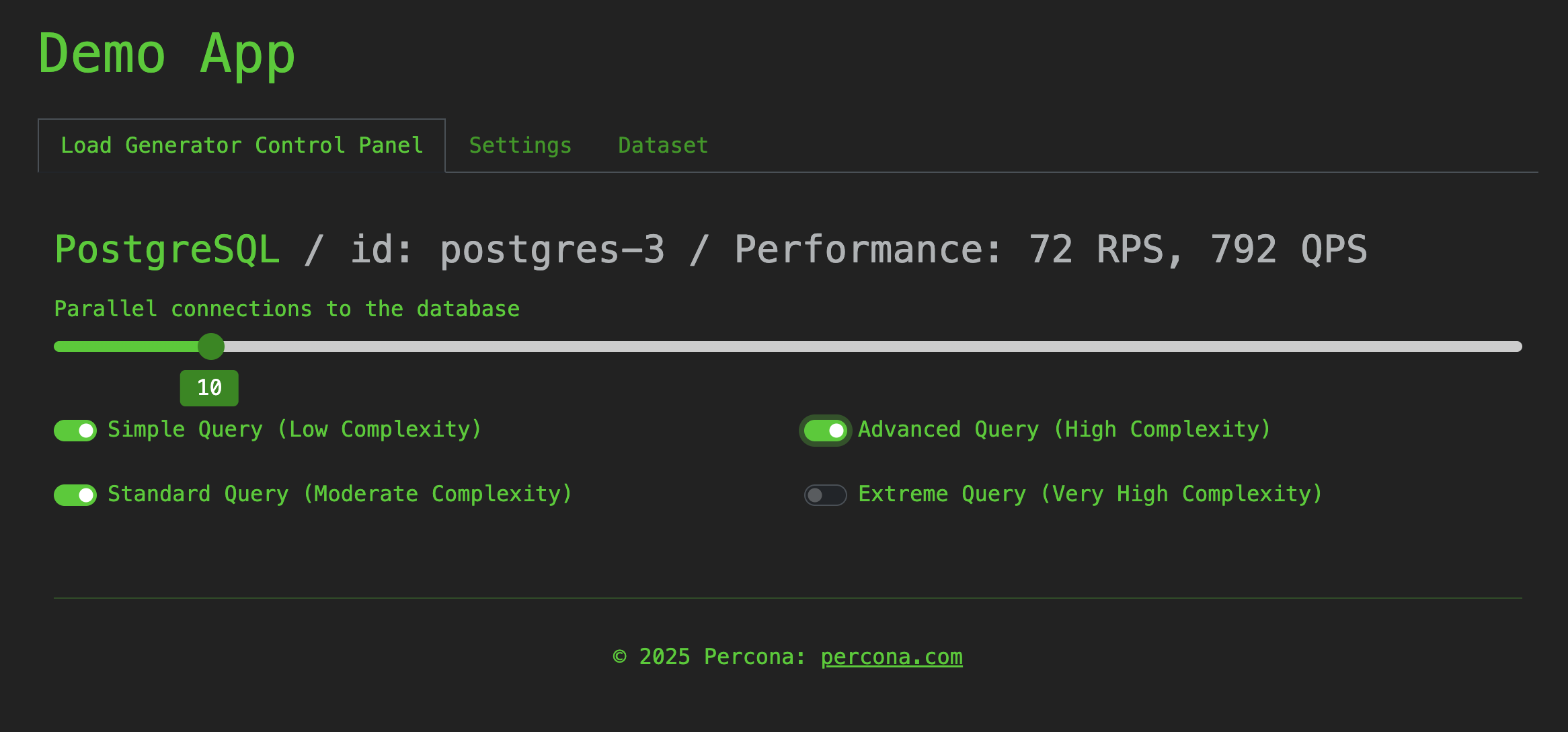

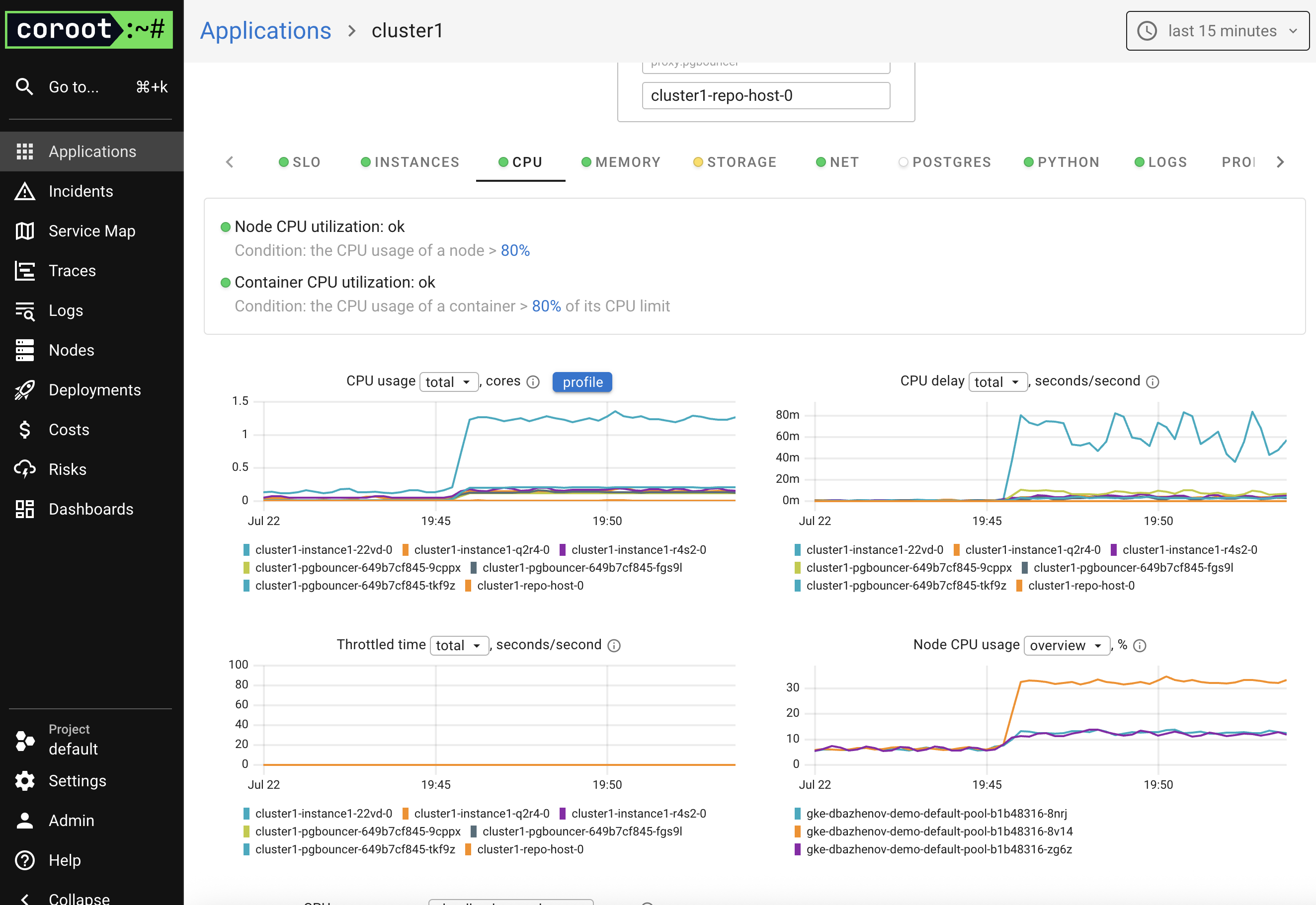

I increased the load on the PostgreSQL cluster using the Demo App to test observability.

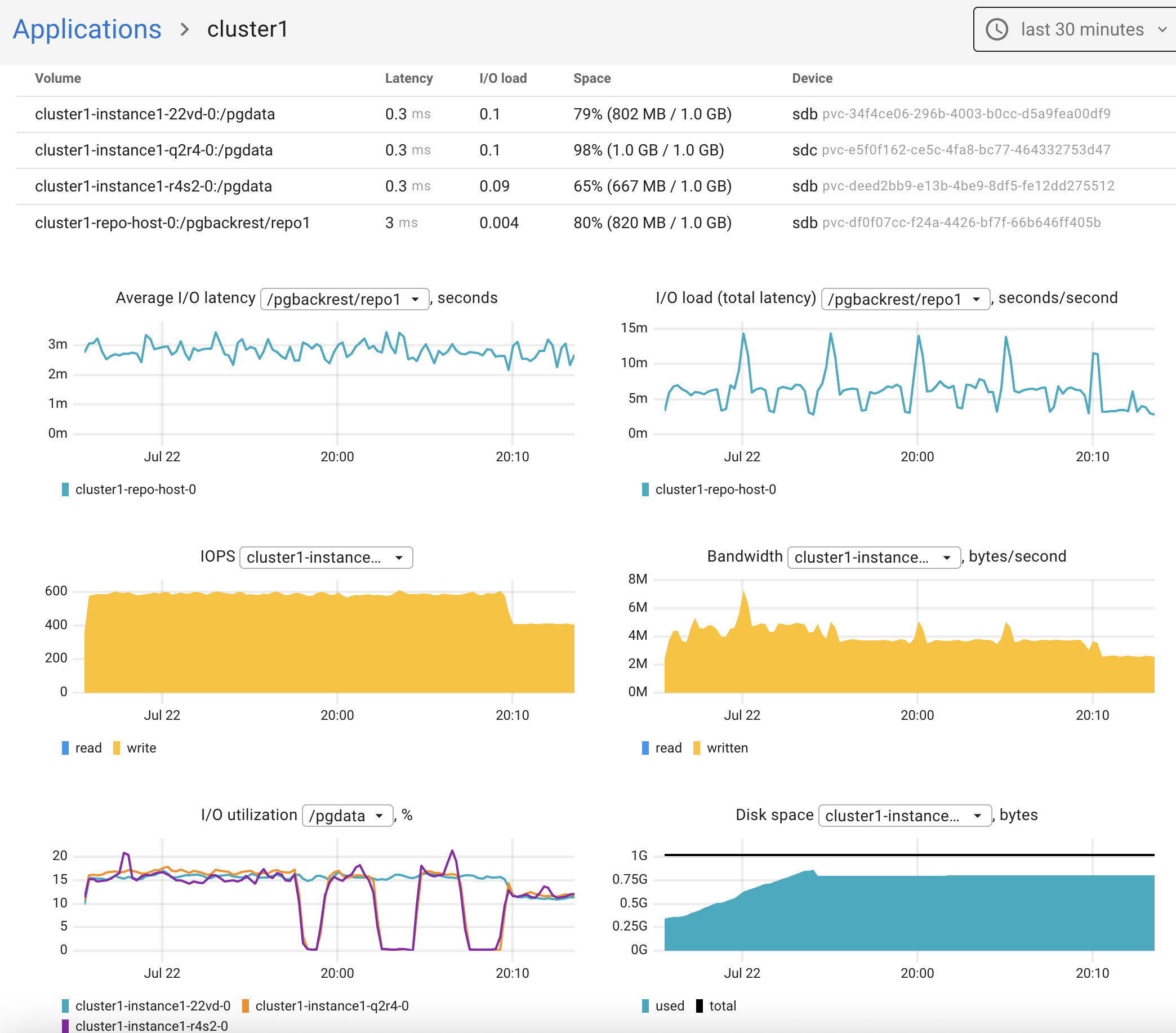

The PostgreSQL cluster dashboard offers several tabs:

- CPU

- Memory

- Storage

- Instances

- Logs

- Profiling

- Tracing

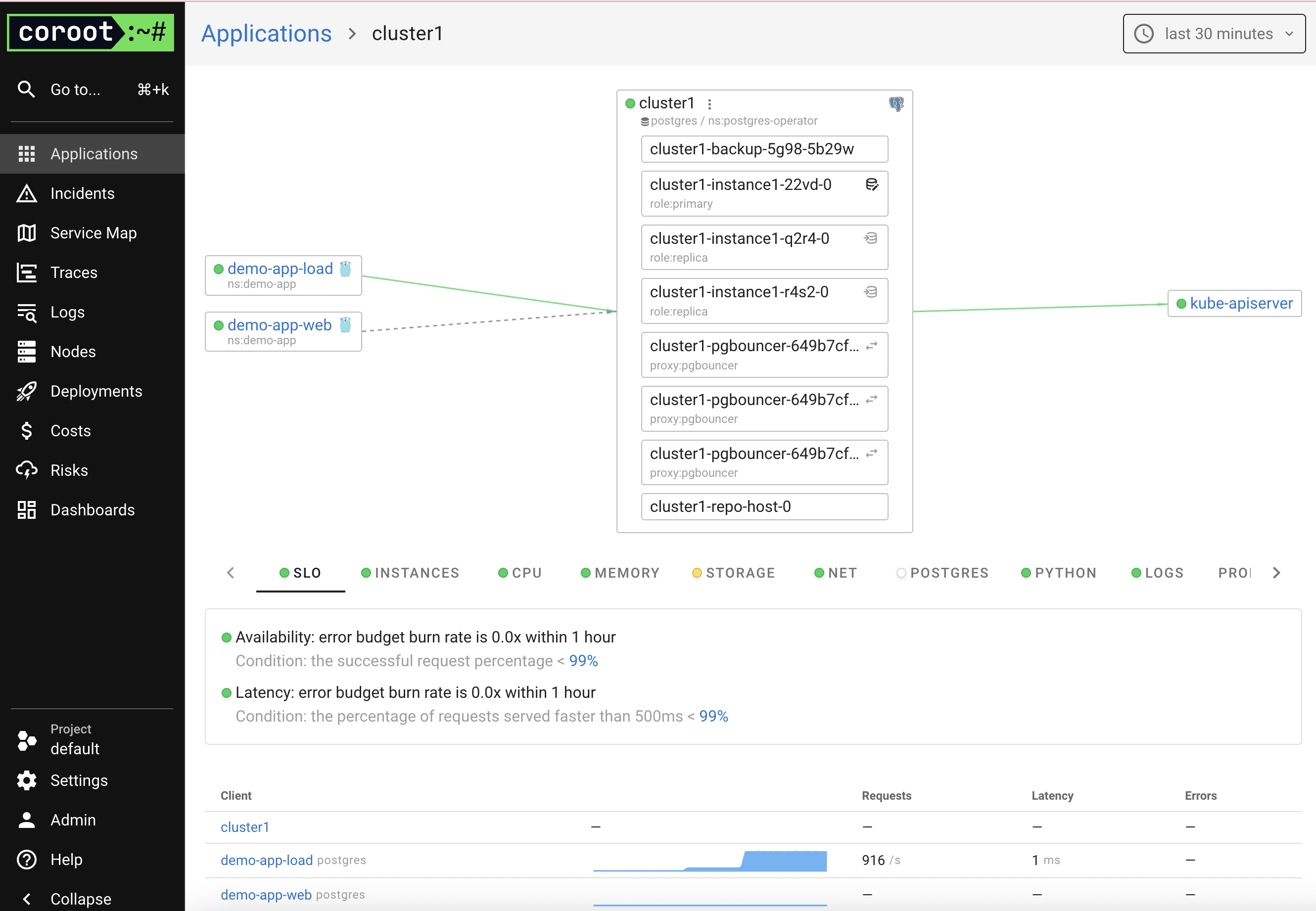

Coroot displays a visual map of service interactions — showing which app connects to the PostgreSQL cluster.

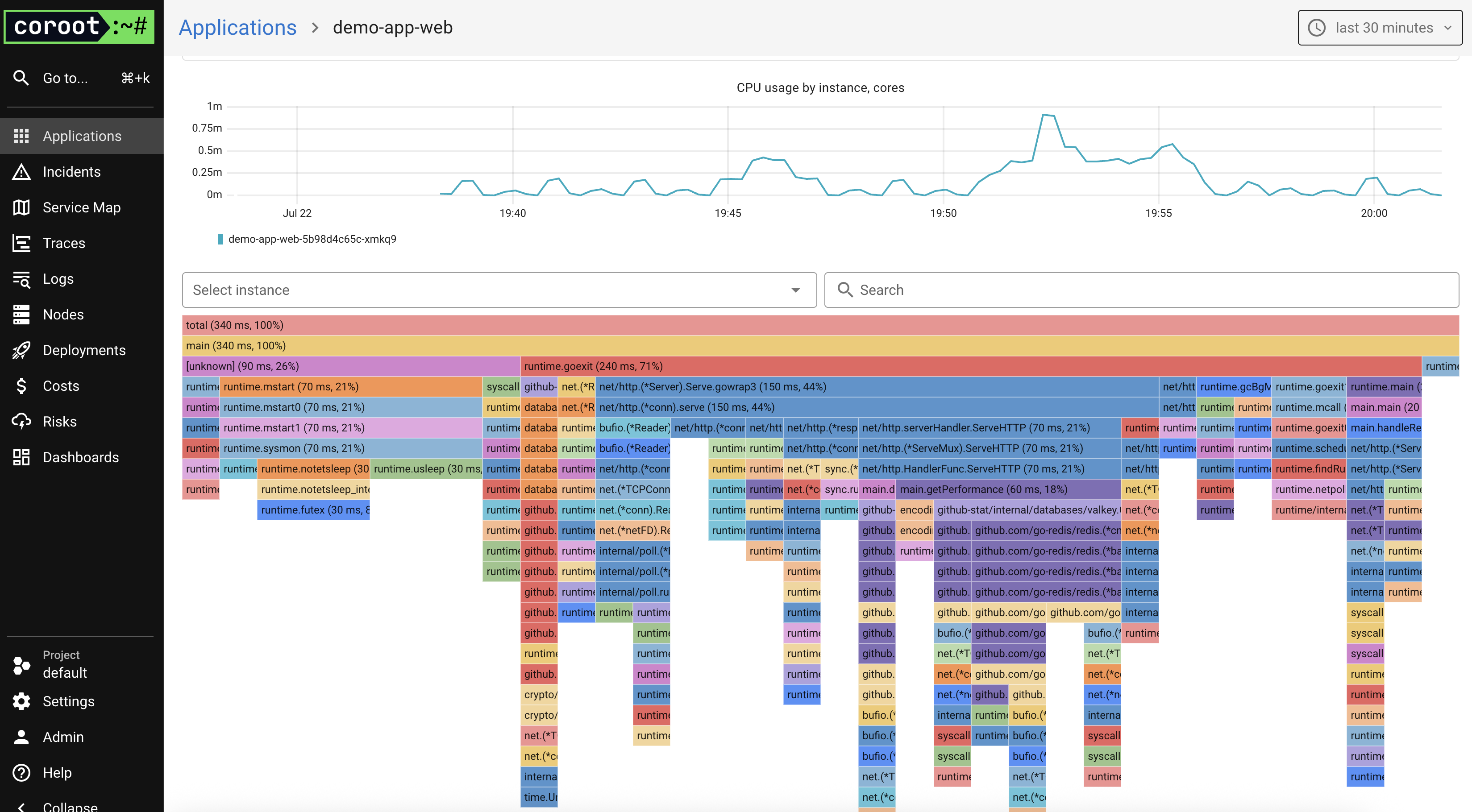

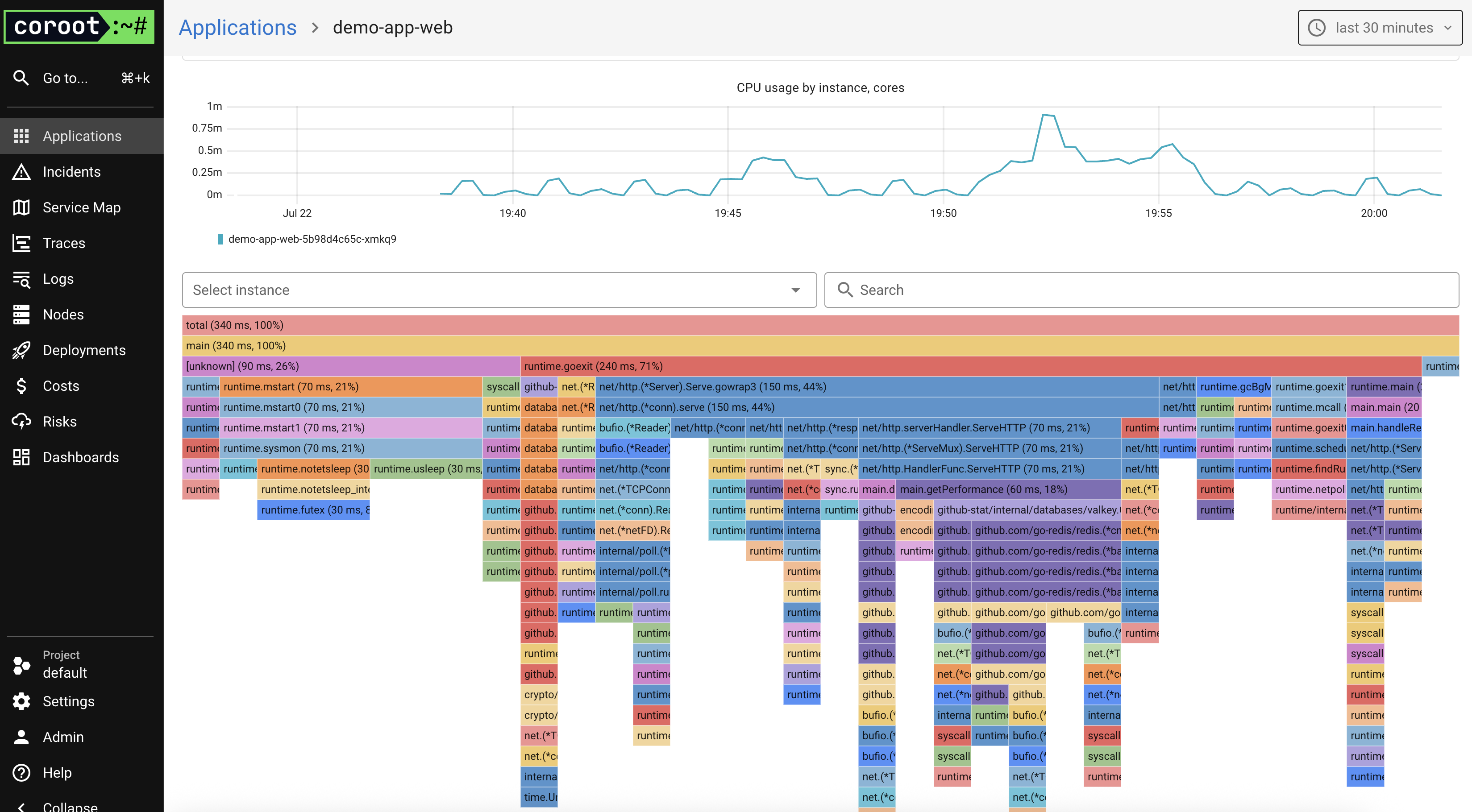

The Profiling tab looks excellent and intuitive. Here’s the Demo App profiling view:

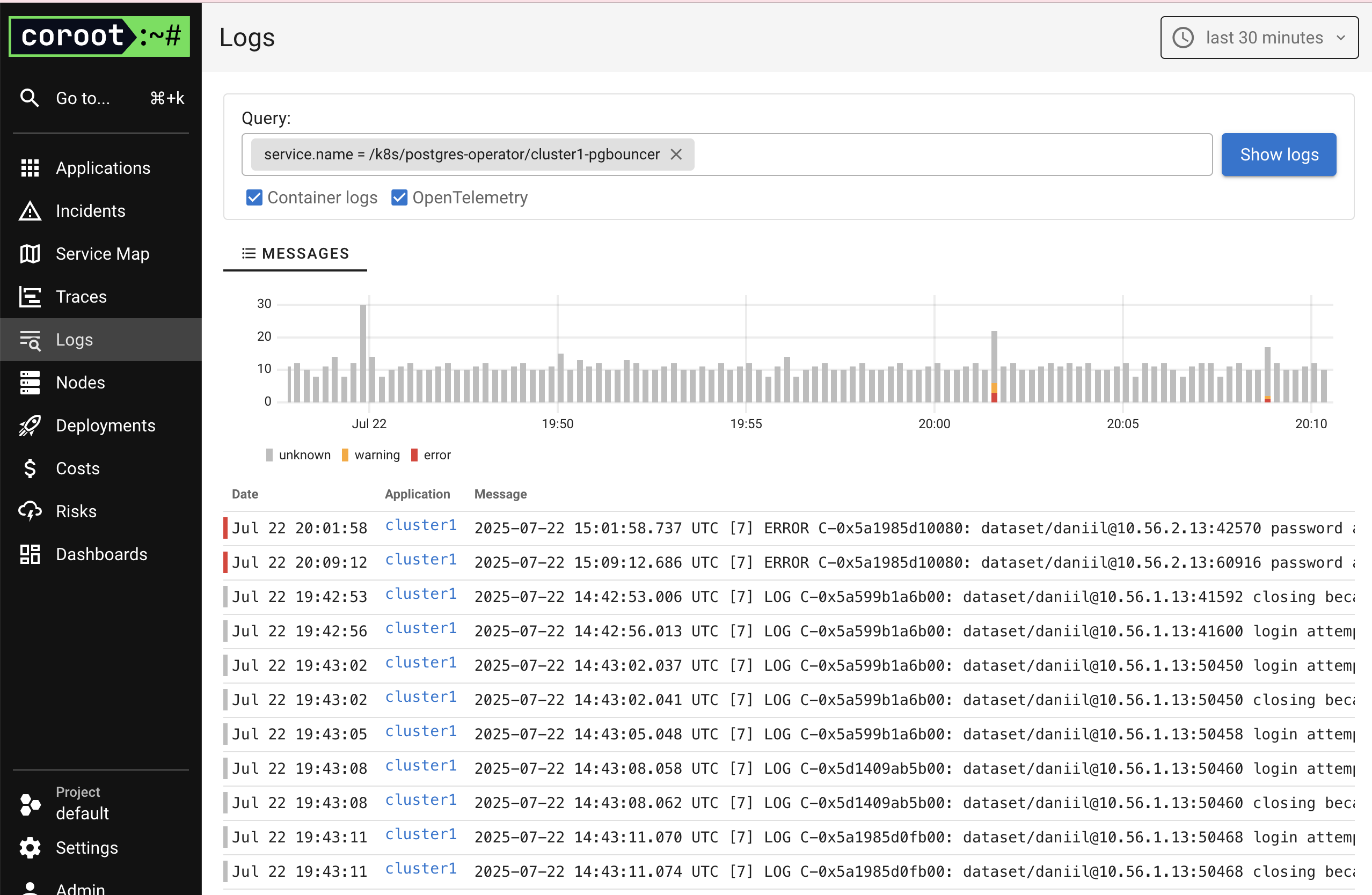

I also triggered an intentional error in the demo app.

Coroot correctly displayed it in both the home view and the app details page.

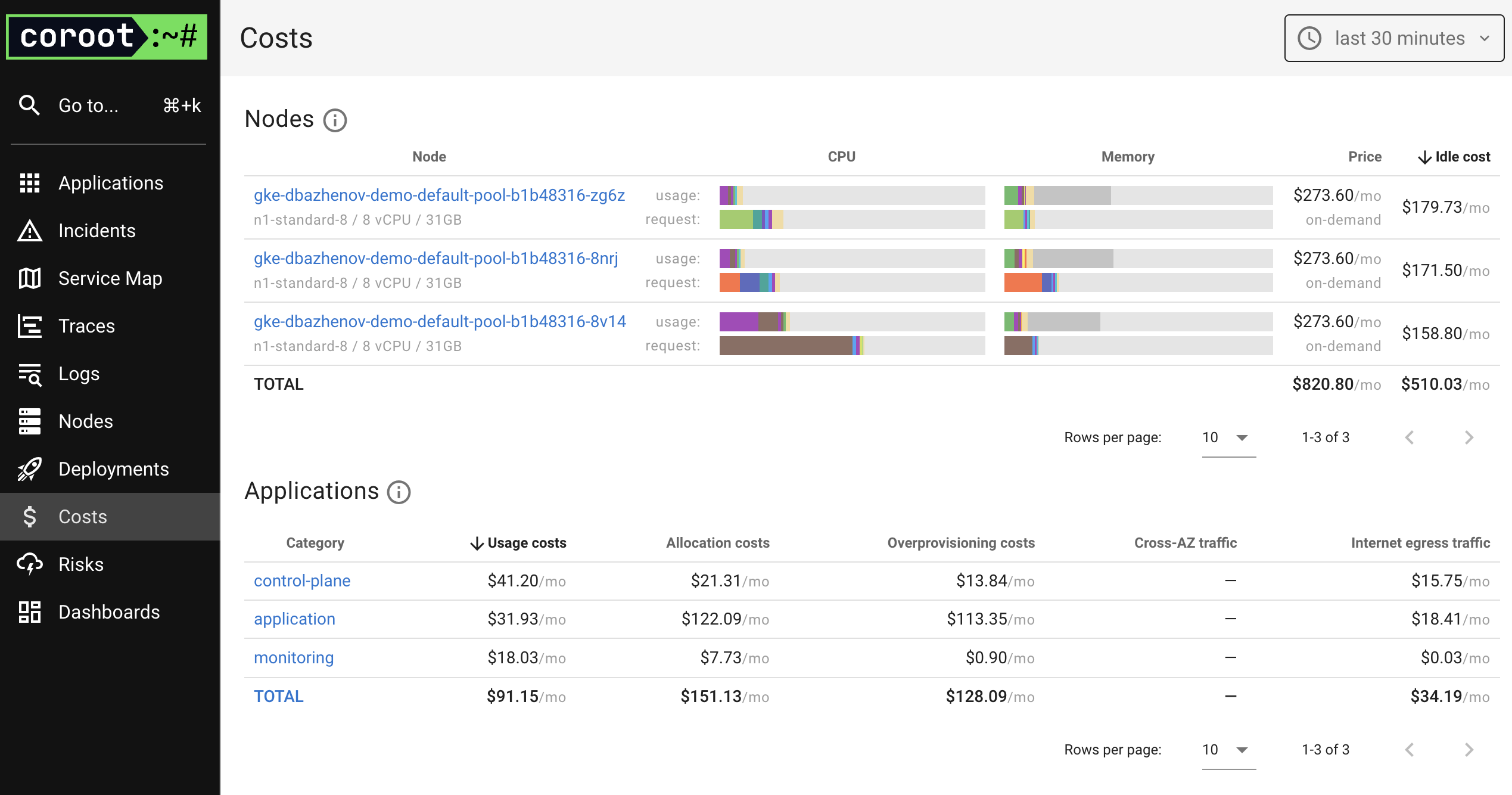

I especially liked the Logs and Costs sections in the sidebar — very well implemented.

First Incident: Storage Usage in PostgreSQL Turns Yellow

While exploring Coroot and the cluster, I increased the load on the PostgreSQL cluster using the Demo App.

After a short while, I noticed that the Postgres disk was full.

I opened the cluster details and went to the Storage tab.

By default, the cr.yaml file allocates just 1Gi of disk space — which is fine for a test setup.

Let’s increase disk size the GitOps way.
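One thing worth checking before resizing: Kubernetes only expands an existing PersistentVolumeClaim in place if its StorageClass has allowVolumeExpansion set to true. A minimal sketch for listing that flag, assuming kubectl access to the cluster; list_storage_class_expansion is a hypothetical helper name.

```shell
# Hypothetical helper: show each StorageClass and whether it allows volume expansion.
list_storage_class_expansion() {
  # A class that does not set the field shows <none> in the EXPANSION column.
  kubectl get storageclass \
    -o custom-columns='NAME:.metadata.name,EXPANSION:.allowVolumeExpansion'
}
```

If the class used by the PostgreSQL volumes does not report true, the size change in cr.yaml may not take effect on the existing volumes.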

Increase Storage Size

Open the file postgres/cr.yaml and locate the section:

    dataVolumeClaimSpec:
      # storageClassName: standard
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

Change storage from 1Gi to 5Gi.

Note: Backup volumes (pgBackRest) are also enabled by default and set to 1Gi.

    manual:
      repoName: repo1
      options:
        - --type=full
      # initialDelaySeconds: 120
    repos:
      - name: repo1
        schedules:
          full: "0 0 * * 6"
          # differential: "0 1 * * 1-6"
          # incremental: "0 1 * * 1-6"
        volume:
          volumeClaimSpec:
            # storageClassName: standard
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: 1Gi

Increase this storage to 5Gi as well.

Save changes to cr.yaml, then commit and push to the GitHub repository.

ArgoCD will automatically apply the changes. Pure GitOps magic.

Check the result in Coroot — everything looks great. The disk is increased to 5Gi and the issue is resolved.
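The resize can also be confirmed with kubectl by comparing the requested and actual size of each claim. This is a sketch only: the namespace of the PostgreSQL cluster is not stated in this post, so it is passed in as an argument, and pvc_sizes is a hypothetical helper name.

```shell
# Hypothetical helper: list requested vs. actual capacity for every PVC in a namespace.
# $1: namespace where the PostgreSQL cluster runs (placeholder; use your own).
pvc_sizes() {
  kubectl get pvc -n "$1" \
    -o custom-columns='NAME:.metadata.name,REQUESTED:.spec.resources.requests.storage,ACTUAL:.status.capacity.storage'
}
```

After ArgoCD applies the change, both columns should show 5Gi for the data and backup volumes. A REQUESTED value of 5Gi with an ACTUAL value still at 1Gi usually means the expansion has not finished yet.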

Conclusion

We’ve installed and tested a solid monitoring tool, and it really makes a difference.

Across this 4-part series, we walked through the GitOps journey step by step:

Part 1 - Created a Kubernetes cluster, installed ArgoCD, and set up a GitHub repository.

Part 2 - Deployed a PostgreSQL cluster using Percona Operator for PostgreSQL.

Part 3 - Deployed a demo app via ArgoCD using Helm.

Part 4 - Installed and tested Coroot, an excellent open-source observability tool.

Managed the PG cluster through GitHub and ArgoCD — scaled replicas, created users, resized volumes, configured access, and more.

Thank you for reading — I hope this series was helpful.

The project files are available in my repository https://github.com/dbazhenov/percona-argocd-pg-coroot

I’d love to hear your questions, feedback, and suggestions for improvement.
