In this post, I provide an illustration of how to use Pentaho Data Integration (PDI) tool to set up external dictionaries in MySQL to support ClickHouse. Although I use MySQL in this example, you can use any PDI supported source.

ClickHouse

ClickHouse is an open-source column-oriented DBMS (columnar database management system) for online analytical processing. Source: wiki.

Pentaho Data Integration

Information from the Pentaho wiki: Pentaho Data Integration (PDI, also called Kettle) is the component of Pentaho responsible for the Extract, Transform and Load (ETL) processes. Though ETL tools are most frequently used in data warehouses environments, PDI can also be used for other purposes:

- Migrating data between applications or databases

- Exporting data from databases to flat files

- Loading data massively into databases

- Data cleansing

- Integrating applications

PDI is easy to use. Every process is created with a graphical tool where you specify what to do without writing code to indicate how to do it; because of this, you could say that PDI is metadata oriented.

External dictionaries

You can add your own dictionaries from various data sources. The data source for a dictionary can be a local text or executable file, an HTTP(s) resource, or another DBMS. For more information, see “Sources for external dictionaries”. ClickHouse:

- Fully or partially stores dictionaries in RAM.

- Periodically updates dictionaries and dynamically loads missing values. In other words, dictionaries can be loaded dynamically.

The configuration of external dictionaries is located in one or more files. The path to the configuration is specified in the dictionaries_config parameter. Dictionaries can be loaded at server startup or at first use, depending on the dictionaries_lazy_load setting. Source: dictionaries.

Example of external dictionary

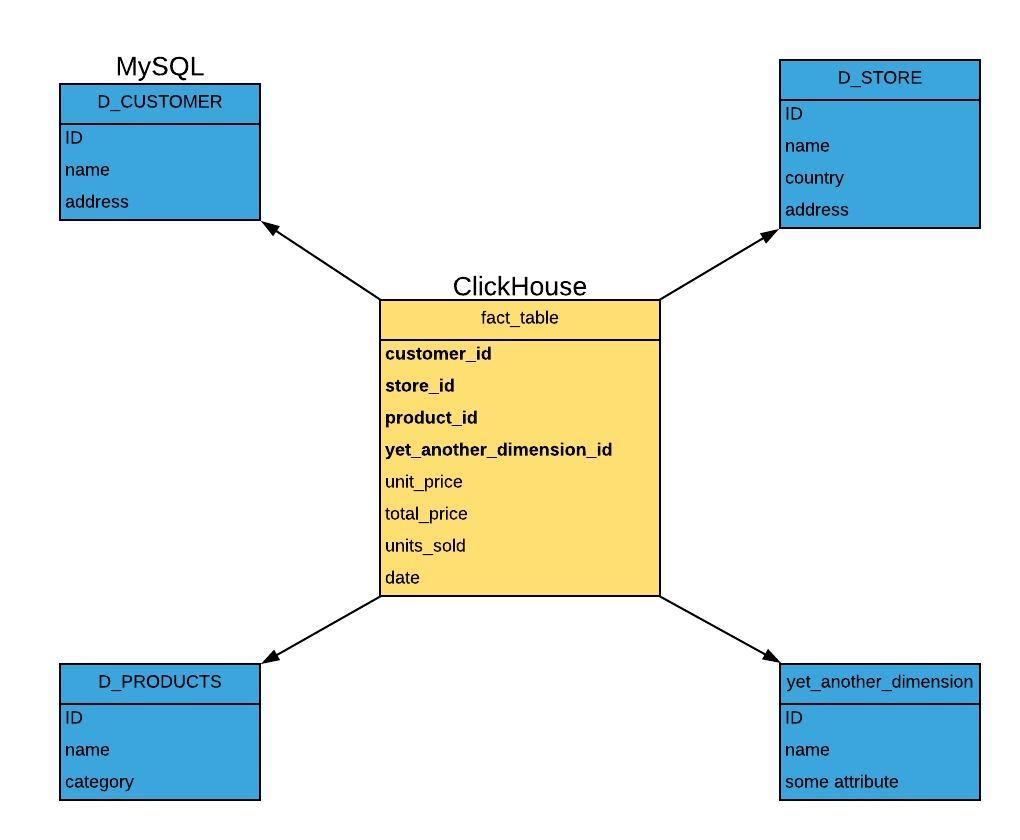

In two words, dictionary is a key(s)-value(s) mapping that could be used for storing some value(s) which will be retrieved using a key. It is a way to build a “star” schema, where dictionaries are dimensions:

If you need a JOIN for joining with dimension tables (these are relatively small tables that contain dimension properties, such as names for advertising campaigns), a JOIN might not be very convenient due to the bulky syntax and the fact that the right table is re-accessed for every query. For such cases, there is an “external dictionaries” feature that you should use instead of JOIN. For more information, see the section “External dictionaries”.

Main point of this blog post:

Demonstrating filling a MySQL table using PDI tool and connecting this table to ClickHouse as an external dictionary. You can create a scheduled job for loading or updating this table.

Filling dictionaries during the ETL process is a challenge. Of course you can write a script (or scripts) that will do all of this, but I’ve found a better way. Benefits:

- Self-documented: you see what exactly PDI job does;

- Easy to modify(see example below)

- Built-in logging

- Very flexible

- If you use the Community Edition you will not pay anything.

Pentaho Data Integration part

You need a UI for running/developing ETL, but it’s not necessary to use the UI for running a transformation or job. Here’s an example of running it from a Linux shell(read PDI’s docs about jobs/transformation):

${PDI_FOLDER}/kitchen.sh -file=${PATH_TO_PDI_JOB_FILE}.kjb [-param:SOMEPARAM=SOMEVALUE]

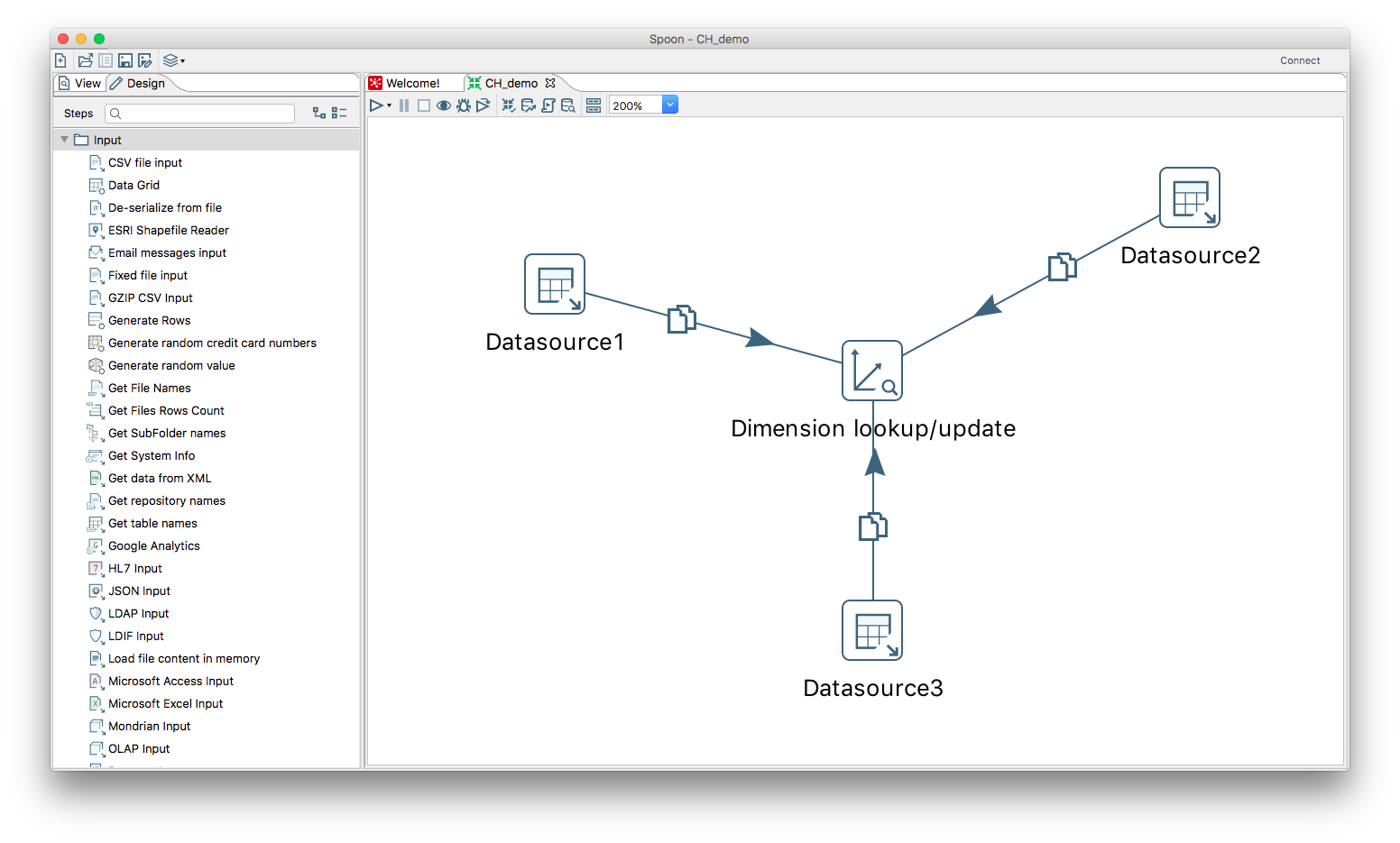

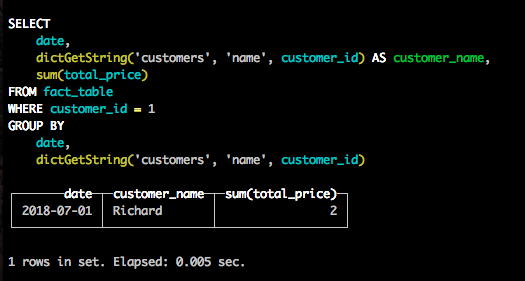

${PDI_FOLDER}/pan.sh -file=${PATH_TO_PDI_TRANSFORMATION_FILE}.ktr [-param:SOMEPARAM=SOMEVALUE]Here is a PDI transformation. In this example I use three tables as a source of information, but you can create very complex logic:

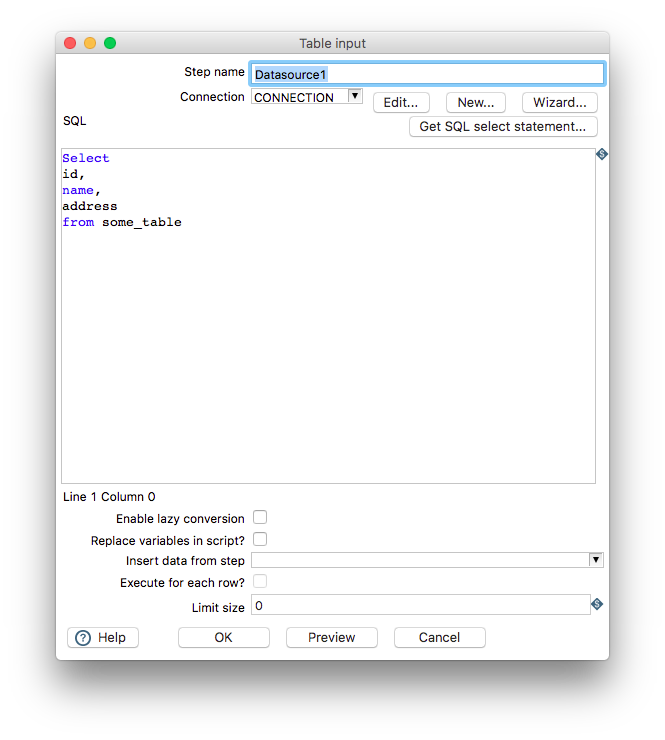

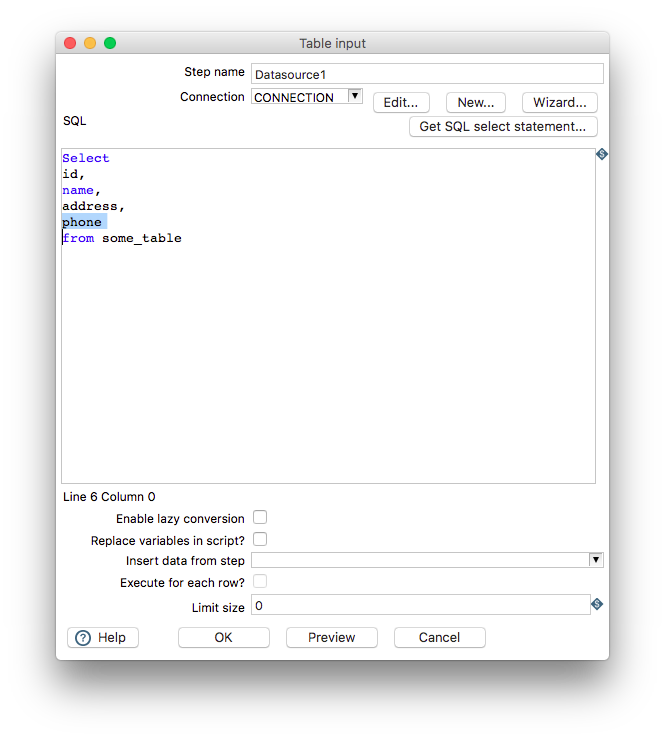

“Datasource1” definition example

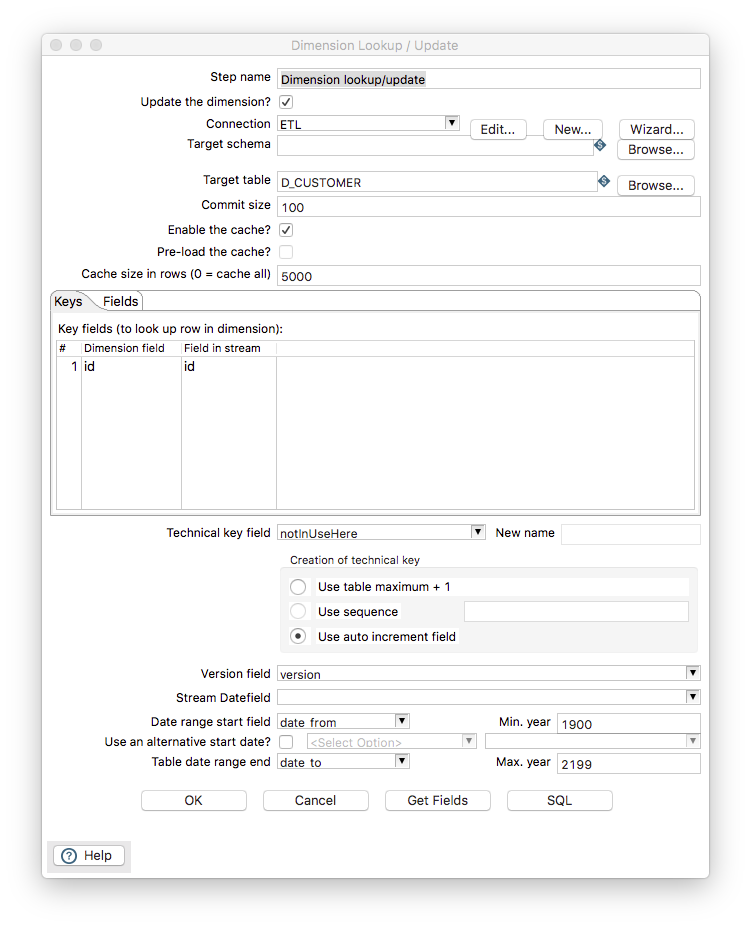

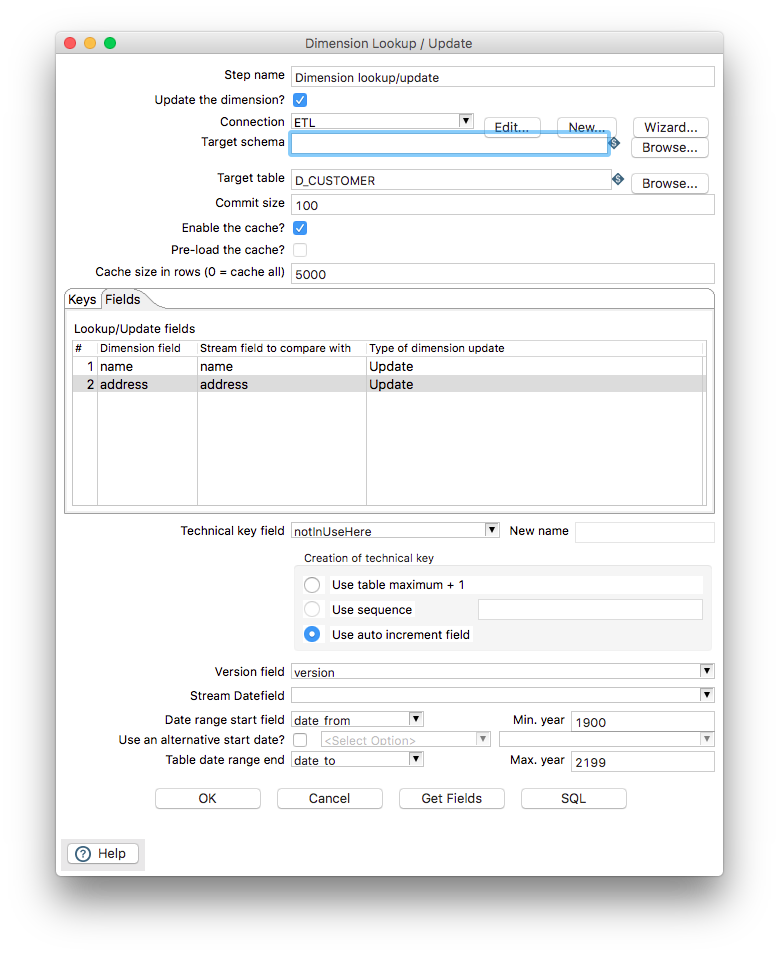

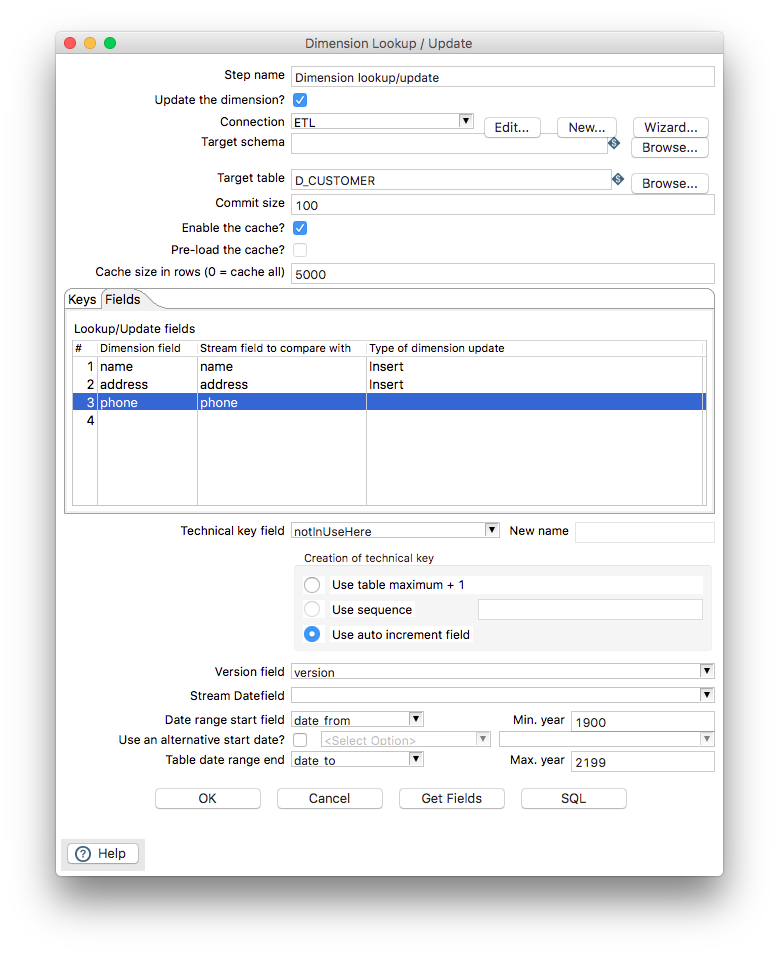

Dimension lookup/update is a step that updates the MySQL table (in this example, it could be any database supported by PDI output step). It will be the source for ClickHouse’s external dictionary:

Fields definition:

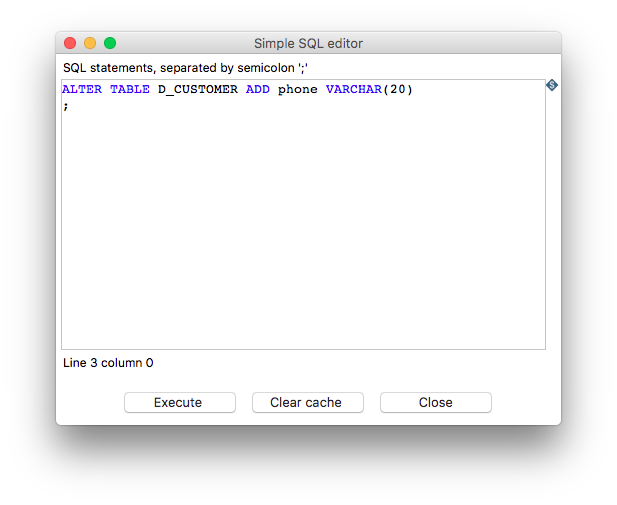

Once you have done this, you hit the “SQL” button and it will generate the DDL code for D_CUSTOMER table. You can manage the algorithm of storing data in the step above: update or insert new record(with time_start/time_end fields). Also, if you use PDI for ETL, then you can generate a “technical key” for your dimension and store this key in ClickHouse, this is a different story… For this example, I will use “id” as a key in the ClickHouse dictionary.

The last step is setting up external dictionary in ClickHouse’s server config.

The ClickHouse part

External dictionary config, in this example you’ll see that I use MySQL:

<dictionaries>

<dictionary>

<name>customers</name>

<source>

<!-- Source configuration -->

<mysql>

<port>3306</port>

<user>MySQL_User</user>

<password>MySQL_Pass</password>

<replica>

<host>MySQL_host</host>

<priority>1</priority>

</replica>

<db>DB_NAME</db>

<table>D_CUSTOMER</table>

</mysql>

</source>

<layout>

<!-- Memory layout configuration -->

<flat/>

</layout>

<structure>

<id>

<name>id</name>

</id>

<attribute>

<name>name</name>

<type>String</type>

<null_value></null_value>

</attribute>

<attribute>

<name>address</name>

<type>String</type>

<null_value></null_value>

</attribute>

<!-- Will be uncommented later

<attribute>

<name>phone</name>

<type>String</type>

<null_value></null_value>

</attribute>

-->

</structure>

<lifetime>

<min>3600</min>

<max>86400</max>

</lifetime>

</dictionary>

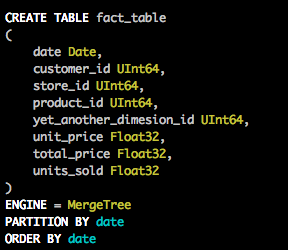

</dictionaries>Creating the fact table in ClickHouse:

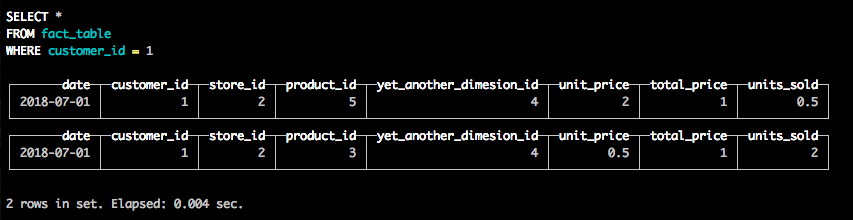

Some sample data:

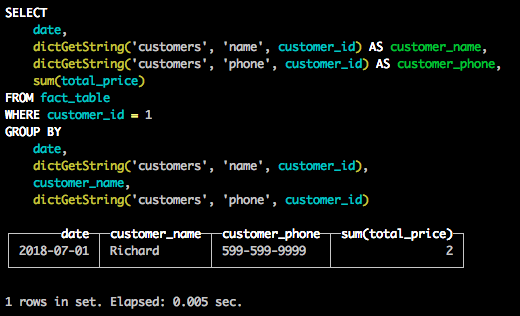

Now we can fetch data aggregated against the customer name:

Dictionary modification

Sometimes, it happens that you need to modify your dimensions. In my example I am going to add phone number to the “customers” dictionary. Not a problem at all. You update your datasource in PDI job:

Open the “Dimension lookup/update” step and add the phone field:

And hit the SQL button.

Also add the “phone” field in ClickHouse’s dictionary config:

<attribute>

<name>phone</name>

<type>String</type>

<null_value></null_value>

</attribute>ClickHouse will update a dictionary on the fly and we are ready to go—if not please check the logs. Now you can run the query without a modification of fact_table:

Also, note that PDI job is an XML file that could be put under version source control tools, so it is easy to track or rollback if needed. Please do not hesitate to ask if you have questions! ∎

Discussion

We invite you to our forum for discussion. You are welcome to use the widget below.